Virtualization Guide


36 Running virtual machines with qemu-system-ARCH

Revision history: 2024-06-27

Once you have a virtual disk image ready (for more information on disk images, see Section 35.2, “Managing disk images with qemu-img”), it is time to start the related virtual machine.

Section 35.1, “Basic installation with qemu-system-ARCH” introduced simple commands to install and run a VM Guest. This chapter focuses on a more detailed explanation of qemu-system-ARCH usage, and shows solutions for more specific tasks. For a complete list of qemu-system-ARCH's options, see its man page (man 1 qemu).

36.1 Basic qemu-system-ARCH invocation

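The qemu-system-ARCH command uses the following syntax:

    qemu-system-ARCH OPTIONS -drive file=DISK_IMAGE

- OPTIONS

Options that define the emulated hardware and the general behavior of the emulator. The most common ones are described in this chapter.

- DISK_IMAGE

Path to the disk image holding the guest system you want to virtualize.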

Important: AArch64 architecture

KVM support is available only for 64-bit Arm® architecture (AArch64). Running QEMU on the AArch64 architecture requires you to specify:

- A machine type designed for QEMU Arm® virtual machines using the -machine virt-VERSION_NUMBER option.

- A firmware image file using the -bios option. Alternatively, you can specify the firmware image files using -drive options, for example:

    -drive file=/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw,if=pflash,format=raw \
    -drive file=/var/lib/libvirt/qemu/nvram/opensuse_VARS.fd,if=pflash,format=raw

- A CPU of the VM Host Server using the -cpu host option (default is cortex-a15).

- The same Generic Interrupt Controller (GIC) version as the host using the -machine gic-version=host option (default is 2).

- If a graphic mode is needed, a graphic device of type virtio-gpu-pci.

For example:

    > sudo qemu-system-aarch64 [...] \
      -bios /usr/share/qemu/qemu-uefi-aarch64.bin \
      -cpu host \
      -device virtio-gpu-pci \
      -machine virt,accel=kvm,gic-version=host

36.2 General qemu-system-ARCH options

This section introduces general qemu-system-ARCH options and options related to the basic emulated hardware, such as the virtual machine's processor, memory, model type, or time processing methods.

- -name NAME_OF_GUEST

Specifies the name of the running guest system. The name is displayed in the window caption and used for the VNC server.

- -boot OPTIONS

Specifies the order in which the defined drives are booted. Drives are represented by letters, where a and b stand for the floppy drives 1 and 2, c stands for the first hard disk, d stands for the first CD-ROM drive, and n to p stand for Etherboot network adapters.

For example, qemu-system-ARCH [...] -boot order=ndc first tries to boot from the network, then from the first CD-ROM drive, and finally from the first hard disk.

- -pidfile FILENAME

Stores the QEMU process identification number (PID) in a file. This is useful if you run QEMU from a script.

- -nodefaults

By default, QEMU creates basic virtual devices even if you do not specify them on the command line. This option turns this feature off, and you must specify every single device manually, including graphical and network cards, parallel or serial ports, or virtual consoles. Even the QEMU monitor is not attached by default.

- -daemonize

“Daemonizes” the QEMU process after it is started. QEMU detaches from the standard input and standard output after it is ready to receive connections on any of its devices.

Note: SeaBIOS BIOS implementation

SeaBIOS is the default BIOS. It can boot from USB devices and from any drive (CD-ROM, floppy, or hard disk), supports USB mice and keyboards, and supports multiple VGA cards. For more information about SeaBIOS, refer to the SeaBIOS Website.
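The general options described above can be combined in a small wrapper script. The following is a minimal sketch that only prints the command line it would run (a dry run), so it works without QEMU installed. The guest name, the PID file location, and the image path are placeholder assumptions, not values from this guide.

```shell
#!/bin/sh
# Dry-run sketch: compose general qemu-system-ARCH options and print them.
# GUEST_NAME, PIDFILE and the image path are illustrative placeholders.
GUEST_NAME="guest1"
PIDFILE="/tmp/${GUEST_NAME}.pid"

qemu_general_cmd() {
    image="$1"
    # -boot order=dc: try the first CD-ROM drive, then the first hard disk.
    printf '%s\n' "qemu-system-x86_64 -name ${GUEST_NAME} -boot order=dc -pidfile ${PIDFILE} -daemonize -drive format=raw,file=${image}"
}

qemu_general_cmd /images/sles.raw
```

When such a command is really executed, a script can later read the PID file to find the daemonized QEMU process.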

36.2.1 Basic virtual hardware

36.2.1.1 Machine type

You can specify the type of the emulated machine. Run qemu-system-ARCH -M help to view a list of supported machine types.

Note: ISA-PC

The machine type isapc: ISA-only-PC is unsupported.

36.2.1.2 CPU model

To specify the type of the processor (CPU) model, run qemu-system-ARCH -cpu MODEL. Use qemu-system-ARCH -cpu help to view a list of supported CPU models.

36.2.1.3 Other basic options

The following is a list of most commonly used options while launching qemu from command line. To see all options available refer to qemu-doc man page.

-m MEGABYTESSpecifies how many megabytes are used for the virtual RAM size.

-balloon virtioSpecifies a paravirtualized device to dynamically change the amount of virtual RAM assigned to VM Guest. The top limit is the amount of memory specified with

-m.-smp NUMBER_OF_CPUSSpecifies how many CPUs to emulate. QEMU supports up to 255 CPUs on the PC platform (up to 64 with KVM acceleration used). This option also takes other CPU-related parameters, such as number of sockets, number of cores per socket, or number of threads per core.

The following is an example of a working

qemu-system-ARCH command line:

>sudoqemu-system-x86_64 \ -name "SLES 15.7" \ -M pc-i440fx-2.7 -m 512 \ -machine accel=kvm -cpu kvm64 -smp 2 \ -drive format=raw,file=/images/sles.raw

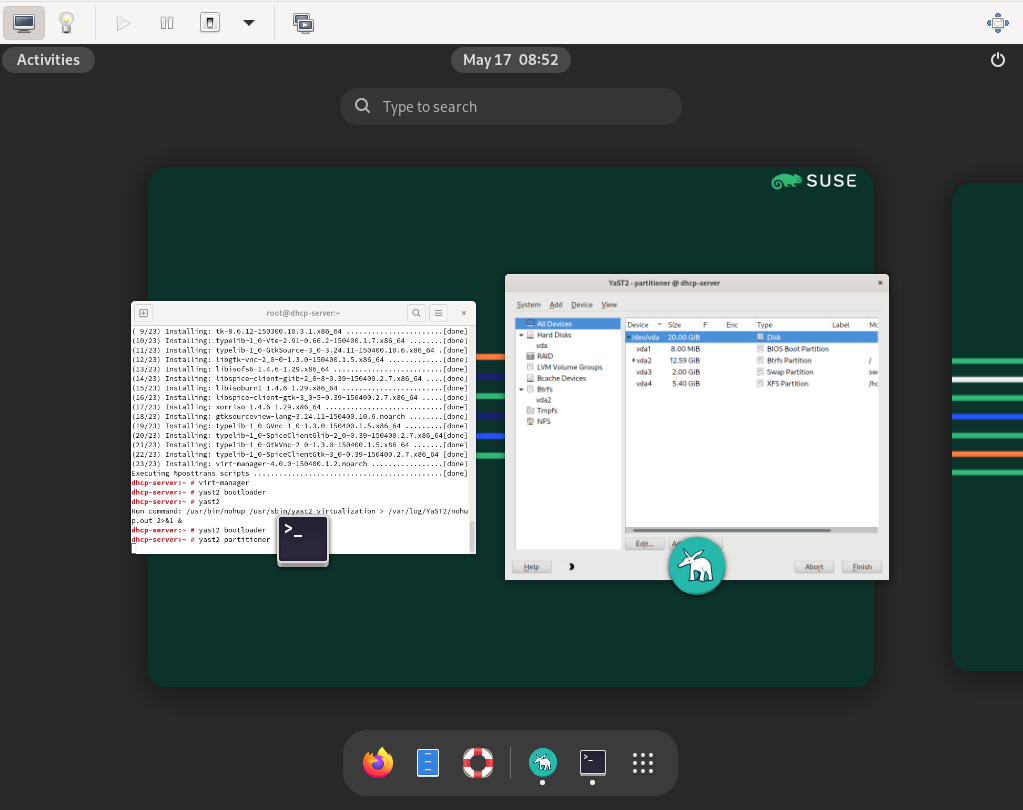

Figure 36.1: QEMU window with SLES as VM Guest #

-no-acpiDisables ACPI support.

-SQEMU starts with CPU stopped. To start CPU, enter

cin QEMU monitor. For more information, see Chapter 37, Virtual machine administration using QEMU monitor.
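The -smp option accepts a topology in addition to a plain CPU count. As a minimal sketch, the helper below multiplies sockets, cores per socket, and threads per core to obtain the total and prints the matching argument. The function name and the sample topology are illustrative assumptions.

```shell
#!/bin/sh
# Sketch: derive the total vCPU count from a socket/core/thread topology
# and print the matching -smp argument. The topology values are examples.
smp_arg() {
    sockets="$1"; cores="$2"; threads="$3"
    total=$((sockets * cores * threads))
    printf '%s\n' "-smp ${total},sockets=${sockets},cores=${cores},threads=${threads}"
}

# One socket with two cores and two threads per core: four vCPUs in total.
smp_arg 1 2 2
```

Keeping the total consistent with the topology avoids a mismatch between the CPU count and the product of the three values.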

36.2.2 Storing and reading configuration of virtual devices

- -readconfig CFG_FILE

Instead of entering the device configuration options on the command line each time you want to run VM Guest, qemu-system-ARCH can read them from a file that was either previously saved with -writeconfig or edited manually.

- -writeconfig CFG_FILE

Dumps the current virtual machine's device configuration to a text file. It can subsequently be reused with the -readconfig option.

    > sudo qemu-system-x86_64 -name "SLES 15.7" \
      -machine accel=kvm -M pc-i440fx-2.7 -m 512 -cpu kvm64 \
      -smp 2 /images/sles.raw -writeconfig /images/sles.cfg
    (exited)
    > cat /images/sles.cfg
    # qemu config file

    [drive]
      index = "0"
      media = "disk"
      file = "/images/sles.raw"

This way you can manage the configuration of your virtual machines' devices in a well-arranged way.
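A configuration file can also be written by hand. The following sketch creates a minimal file with a single [drive] section, using the same keys as the sample above, and prints the command that would load it. The temporary file location and the image path are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: write a minimal device configuration by hand and print the
# command that would load it. The temporary file location is an assumption.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
# qemu config file

[drive]
  index = "0"
  media = "disk"
  file = "/images/sles.raw"
EOF

# Dry run: print the command instead of starting a guest.
printf '%s\n' "qemu-system-x86_64 -machine accel=kvm -m 512 -readconfig ${cfg}"
```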

36.2.3 Guest real-time clock

- -rtc OPTIONS

Specifies the way the RTC is handled inside a VM Guest. By default, the clock of the guest is derived from that of the host system. Therefore, it is recommended that the host system clock is synchronized with an accurate external clock, for example, via NTP service.

If you need to isolate the VM Guest clock from the host one, specify clock=vm instead of the default clock=host.

You can also specify the initial time of the VM Guest's clock with the base option:

    > sudo qemu-system-x86_64 [...] -rtc clock=vm,base=2010-12-03T01:02:00

Instead of a time stamp, you can specify utc or localtime. The former instructs VM Guest to start at the current UTC value (Coordinated Universal Time, see https://en.wikipedia.org/wiki/UTC), while the latter applies the local time setting.
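The time stamp passed to base has the form YYYY-MM-DDThh:mm:ss. As a small sketch, the helper below prints an -rtc argument for a given time stamp and falls back to the current UTC time when none is passed. The function name is a hypothetical one chosen for this example.

```shell
#!/bin/sh
# Sketch: build an -rtc argument that isolates the guest clock from the
# host and starts it at a given UTC time stamp.
rtc_arg() {
    # Default to the current UTC time if no time stamp is passed.
    base="${1:-$(date -u +%Y-%m-%dT%H:%M:%S)}"
    printf '%s\n' "-rtc clock=vm,base=${base}"
}

rtc_arg 2010-12-03T01:02:00
rtc_arg
```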

36.3 Using devices in QEMU

QEMU virtual machines emulate all devices needed to run a VM Guest. QEMU supports, for example, several types of network cards, block devices (hard and removable drives), USB devices, character devices (serial and parallel ports), or multimedia devices (graphic and sound cards). This section introduces options to configure multiple types of supported devices.

Tip

If a device defined with an option such as -drive needs a special driver and driver properties to be set, specify them with the -device option, and link the two with the drive= suboption. For example:

    > sudo qemu-system-x86_64 [...] -drive if=none,id=drive0,format=raw \
      -device virtio-blk-pci,drive=drive0,scsi=off ...

To get help on available drivers and their properties, use -device ? and -device DRIVER,?.

36.3.1 Block devices

Block devices are vital for virtual machines. These are fixed or removable storage media called drives. One of the connected hard disks typically holds the guest operating system to be virtualized.

Virtual machine drives are defined with -drive. This option has many sub-options, some of which are described in this section. For the complete list, see the man page (man 1 qemu).

Sub-options for the -drive option

- file=image_fname

Specifies the path to the disk image to be used with this drive. If not specified, an empty (removable) drive is assumed.

- if=drive_interface

Specifies the type of interface to which the drive is connected. Currently only floppy, scsi, ide, or virtio are supported by SUSE. virtio defines a paravirtualized disk driver. Default is ide.

- index=index_of_connector

Specifies the index number of a connector on the disk interface (see the if option) where the drive is connected. If not specified, the index is automatically incremented.

- media=type

Specifies the type of media. Can be disk for hard disks, or cdrom for removable CD-ROM drives.

- format=img_fmt

Specifies the format of the connected disk image. If not specified, the format is autodetected. Currently, SUSE supports raw and qcow2 formats.

- cache=method

Specifies the caching method for the drive. Possible values are unsafe, writethrough, writeback, directsync, or none. To improve performance when using the qcow2 image format, select writeback. none disables the host page cache and, therefore, is the safest option. Default for image files is writeback. For more information, see Chapter 18, Disk cache modes.

Tip

To simplify defining block devices, QEMU understands several shortcuts which you may find handy when entering the qemu-system-ARCH command line.

You can use

    > sudo qemu-system-x86_64 -cdrom /images/cdrom.iso

instead of

    > sudo qemu-system-x86_64 -drive format=raw,file=/images/cdrom.iso,index=2,media=cdrom

and

    > sudo qemu-system-x86_64 -hda /images/image1.raw -hdb /images/image2.raw \
      -hdc /images/image3.raw -hdd /images/image4.raw

instead of

    > sudo qemu-system-x86_64 -drive format=raw,file=/images/image1.raw,index=0,media=disk \
      -drive format=raw,file=/images/image2.raw,index=1,media=disk \
      -drive format=raw,file=/images/image3.raw,index=2,media=disk \
      -drive format=raw,file=/images/image4.raw,index=3,media=disk
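The expansion from the -hda to -hdd shortcuts to explicit -drive options follows a simple pattern: each image gets the next connector index. The following sketch generates the long form for any list of raw images. The helper name is hypothetical and the image paths are examples.

```shell
#!/bin/sh
# Sketch: expand a list of raw images into explicit -drive options,
# assigning consecutive connector indexes starting at 0.
drive_args() {
    index=0
    for image in "$@"; do
        printf '%s\n' "-drive format=raw,file=${image},index=${index},media=disk"
        index=$((index + 1))
    done
}

drive_args /images/image1.raw /images/image2.raw
```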

Tip: Using host drives instead of images

As an alternative to using disk images (see Section 35.2, “Managing disk images with qemu-img”) you can also use existing VM Host Server disks, connect them as drives, and access them from VM Guest. Use the host disk device directly instead of disk image file names.

To access the host CD-ROM drive, use

    > sudo qemu-system-x86_64 [...] -drive file=/dev/cdrom,media=cdrom

To access the host hard disk, use

    > sudo qemu-system-x86_64 [...] -drive file=/dev/hdb,media=disk

A host drive used by a VM Guest must not be accessed concurrently by the VM Host Server or another VM Guest.

36.3.1.1 Freeing unused guest disk space

A sparse image file is a type of disk image file that grows in size as the user adds data to it, taking up only as much disk space as the data stored in it. For example, if you copy 1 GB of data inside the sparse disk image, its size grows by 1 GB. If you then delete, for example, 500 MB of the data, the image size does not decrease by default.

For this reason, the KVM command line provides the discard=on option. It tells the hypervisor to automatically free the “holes” after deleting data from the sparse guest image. This option is valid only for the if=scsi drive interface:

    > sudo qemu-system-x86_64 [...] -drive format=img_format,file=/path/to/file.img,if=scsi,discard=on

Important: Support status

if=scsi is not supported. This interface does not map to virtio-scsi, but rather to the lsi SCSI adapter.

36.3.1.2 IOThreads

IOThreads are dedicated event loop threads for virtio devices to perform I/O requests to improve scalability, especially on an SMP VM Host Server with SMP VM Guests using many disk devices. Instead of using QEMU's main event loop for I/O processing, IOThreads allow spreading I/O work across multiple CPUs and can improve latency when properly configured.

IOThreads are enabled by defining IOThread objects. virtio devices can then use the objects for their I/O event loops. Many virtio devices can use a single IOThread object, or virtio devices and IOThread objects can be configured in a 1:1 mapping. The following example creates a single IOThread with ID iothread0 which is then used as the event loop for two virtio-blk devices.

    > sudo qemu-system-x86_64 [...] -object iothread,id=iothread0 \
      -drive if=none,id=drive0,cache=none,aio=native,format=raw,file=filename \
      -device virtio-blk-pci,drive=drive0,scsi=off,iothread=iothread0 \
      -drive if=none,id=drive1,cache=none,aio=native,format=raw,file=filename \
      -device virtio-blk-pci,drive=drive1,scsi=off,iothread=iothread0 [...]

The following qemu command line example illustrates a 1:1 virtio device to IOThread mapping:

    > sudo qemu-system-x86_64 [...] -object iothread,id=iothread0 \
      -object iothread,id=iothread1 \
      -drive if=none,id=drive0,cache=none,aio=native,format=raw,file=filename \
      -device virtio-blk-pci,drive=drive0,scsi=off,iothread=iothread0 \
      -drive if=none,id=drive1,cache=none,aio=native,format=raw,file=filename \
      -device virtio-blk-pci,drive=drive1,scsi=off,iothread=iothread1 [...]
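With more than a few disks, writing the 1:1 mapping by hand becomes repetitive. The sketch below prints one IOThread object, one drive, and one virtio-blk device per image, reusing the ID naming and the drive properties from the examples above. The helper name and the image paths are assumptions.

```shell
#!/bin/sh
# Sketch: print a 1:1 IOThread-to-disk mapping for any number of raw images.
# IDs (iothreadN, driveN) follow the naming used in the examples above.
iothread_args() {
    n=0
    for image in "$@"; do
        printf '%s\n' "-object iothread,id=iothread${n}"
        printf '%s\n' "-drive if=none,id=drive${n},cache=none,aio=native,format=raw,file=${image}"
        printf '%s\n' "-device virtio-blk-pci,drive=drive${n},scsi=off,iothread=iothread${n}"
        n=$((n + 1))
    done
}

iothread_args /images/disk0.raw /images/disk1.raw
```

Each image produces three option lines, so the output can be appended to a qemu-system-ARCH command line as is.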

36.3.1.3 Bio-based I/O path for virtio-blk

For better performance of I/O-intensive applications, a new I/O path was introduced for the virtio-blk interface in kernel version 3.7. This bio-based block device driver skips the I/O scheduler, and thus shortens the I/O path in guest and has lower latency. It is especially useful for high-speed storage devices, such as SSD disks.

The driver is disabled by default. To use it, do the following:

1. Append virtio_blk.use_bio=1 to the kernel command line on the guest. One way to do so is to edit /etc/default/grub, search for the line that contains GRUB_CMDLINE_LINUX_DEFAULT=, and add the kernel parameter at the end. Then run grub2-mkconfig >/boot/grub2/grub.cfg to update the grub2 boot menu.

2. Reboot the guest with the new kernel command line active.

Tip: Bio-based driver on slow devices

The bio-based virtio-blk driver does not help on slow devices such as spinning hard disks. The reason is that the benefit of scheduling is larger than what the shortened bio path offers. Do not use the bio-based driver on slow devices.

36.3.1.4 Accessing iSCSI resources directly

QEMU now integrates with libiscsi. This allows QEMU to access iSCSI resources directly and use them as virtual machine block devices. This feature does not require any host iSCSI initiator configuration, as is needed for a libvirt iSCSI target based storage pool setup. Instead it directly connects guest storage interfaces to an iSCSI target LUN via the user space library libiscsi. iSCSI-based disk devices can also be specified in the libvirt XML configuration.

Note: RAW image format

This feature is only available using the RAW image format, as the iSCSI protocol has certain technical limitations.

The following is the QEMU command line interface for iSCSI connectivity.

Note: virt-manager limitation

libiscsi-based storage provisioning is not yet exposed by the virt-manager interface. Configure it either by directly editing the guest XML or on the QEMU command line.

    > sudo qemu-system-x86_64 -machine accel=kvm \
      -drive file=iscsi://192.168.100.1:3260/iqn.2016-08.com.example:314605ab-a88e-49af-b4eb-664808a3443b/0,format=raw,if=none,id=mydrive,cache=none \
      -device ide-hd,bus=ide.0,unit=0,drive=mydrive ...
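The file= value in the command above is a URL made of the portal address, the port, the target IQN, and the LUN. As a minimal sketch, assuming the layout iscsi://HOST:PORT/TARGET_IQN/LUN without authentication, the helper below assembles such a URL from its parts. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: assemble the iscsi:// URL used in -drive file=... from its parts.
# Layout assumed: iscsi://HOST:PORT/TARGET_IQN/LUN (no authentication).
iscsi_url() {
    host="$1"; port="$2"; iqn="$3"; lun="$4"
    printf '%s\n' "iscsi://${host}:${port}/${iqn}/${lun}"
}

url="$(iscsi_url 192.168.100.1 3260 iqn.2016-08.com.example:314605ab-a88e-49af-b4eb-664808a3443b 0)"
# Dry run: print the resulting -drive option instead of starting a guest.
printf '%s\n' "-drive file=${url},format=raw,if=none,id=mydrive,cache=none"
```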

Here is an example snippet of guest domain XML which uses the protocol-based iSCSI:

    <devices>
    ...
      <disk type='network' device='disk'>
        <driver name='qemu' type='raw'/>
        <source protocol='iscsi' name='iqn.2013-07.com.example:iscsi-nopool/2'>
          <host name='example.com' port='3260'/>
        </source>
        <auth username='myuser'>
          <secret type='iscsi' usage='libvirtiscsi'/>
        </auth>
        <target dev='vda' bus='virtio'/>
      </disk>
    </devices>

Contrast that with an example which uses the host-based iSCSI initiator which virt-manager sets up:

    <devices>
    ...
      <disk type='block' device='disk'>
        <driver name='qemu' type='raw' cache='none' io='native'/>
        <source dev='/dev/disk/by-path/scsi-0:0:0:0'/>
        <target dev='hda' bus='ide'/>
        <address type='drive' controller='0' bus='0' target='0' unit='0'/>
      </disk>
      <controller type='ide' index='0'>
        <address type='pci' domain='0x0000' bus='0x00' slot='0x01'
                 function='0x1'/>
      </controller>
    </devices>

36.3.1.5 Using RADOS block devices with QEMU

RADOS Block Devices (RBD) store data in a Ceph cluster. They allow snapshotting, replication and data consistency. You can use an RBD from your KVM-managed VM Guests similarly to how you use other block devices.

36.3.2 Graphic devices and display options

This section describes QEMU options affecting the type of the emulated video card and the way VM Guest graphical output is displayed.

36.3.2.1 Defining video cards

QEMU uses -vga to define a video card used to display VM Guest graphical output. The -vga option understands the following values:

- none

Disables video cards on VM Guest (no video card is emulated). You can still access the running VM Guest via the serial console.

- std

Emulates a standard VESA 2.0 VBE video card. Use it if you intend to use high display resolution on VM Guest.

- qxl

QXL is a paravirtual graphic card. It is VGA compatible (including VESA 2.0 VBE support). qxl is recommended when using the spice video protocol.

- virtio

Paravirtual VGA graphic card.

36.3.2.2 Display options

The following options affect the way VM Guest graphical output is displayed.

- -display gtk

Display video output in a GTK window. This interface provides UI elements to configure and control the VM during runtime.

- -display sdl

Display video output via SDL in a separate graphics window. For more information, see the SDL documentation.

- -spice option[,option[,...]]

Enables the spice remote desktop protocol.

- -display vnc

Refer to Section 36.5, “Viewing a VM Guest with VNC” for more information.

- -nographic

Disables QEMU's graphical output. The emulated serial port is redirected to the console.

After starting the virtual machine with -nographic, press Ctrl–A H in the virtual console to view the list of other useful shortcuts, for example, to toggle between the console and the QEMU monitor.

    > sudo qemu-system-x86_64 -hda /images/sles_base.raw -nographic

    C-a h    print this help
    C-a x    exit emulator
    C-a s    save disk data back to file (if -snapshot)
    C-a t    toggle console timestamps
    C-a b    send break (magic sysrq)
    C-a c    switch between console and monitor
    C-a C-a  sends C-a
    (pressed C-a c)

    QEMU 2.3.1 monitor - type 'help' for more information
    (qemu)

- -no-frame

Disables decorations for the QEMU window. Convenient for dedicated desktop work space.

- -full-screen

Starts QEMU graphical output in full screen mode.

- -no-quit

Disables the close button of the QEMU window and prevents it from being closed by force.

- -alt-grab, -ctrl-grab

By default, the QEMU window releases the “captured” mouse after pressing Ctrl–Alt. You can change the key combination to either Ctrl–Alt–Shift (-alt-grab), or the right Ctrl key (-ctrl-grab).

36.3.3 USB devices

There are two ways to create USB devices usable by the VM Guest in KVM: you can either emulate new USB devices inside a VM Guest, or assign an existing host USB device to a VM Guest. To use USB devices in QEMU you first need to enable the generic USB driver with the -usb option. Then you can specify individual devices with the -usbdevice option.

36.3.3.1 Emulating USB devices in VM Guest

SUSE currently supports the following types of USB devices: disk, host, serial, braille, net, mouse, and tablet.

Types of USB devices for the -usbdevice option

- disk

Emulates a mass storage device based on file. The optional format option is used rather than detecting the format.

    > sudo qemu-system-x86_64 [...] -usbdevice disk:format=raw:/virt/usb_disk.raw

- host

Pass through the host device (identified by bus.addr).

- serial

Serial converter to a host character device.

- braille

Emulates a braille device using BrlAPI to display the braille output.

- net

Emulates a network adapter that supports CDC Ethernet and RNDIS protocols.

- mouse

Emulates a virtual USB mouse. This option overrides the default PS/2 mouse emulation. The following example shows the hardware status of a mouse on VM Guest started with qemu-system-ARCH [...] -usbdevice mouse:

    > sudo hwinfo --mouse
    20: USB 00.0: 10503 USB Mouse
    [Created at usb.122]
    UDI: /org/freedesktop/Hal/devices/usb_device_627_1_1_if0
    [...]
    Hardware Class: mouse
    Model: "Adomax QEMU USB Mouse"
    Hotplug: USB
    Vendor: usb 0x0627 "Adomax Technology Co., Ltd"
    Device: usb 0x0001 "QEMU USB Mouse"
    [...]

- tablet

Emulates a pointer device that uses absolute coordinates (such as touchscreen). This option overrides the default PS/2 mouse emulation. The tablet device is useful if you are viewing VM Guest via the VNC protocol. See Section 36.5, “Viewing a VM Guest with VNC” for more information.

36.3.4 Character devices

Use -chardev to create a new character device. The option uses the following general syntax:

    qemu-system-x86_64 [...] -chardev BACKEND_TYPE,id=ID_STRING

where BACKEND_TYPE can be one of null, socket, udp, msmouse, vc, file, pipe, console, serial, pty, stdio, braille, tty, or parport. All character devices must have a unique identification string up to 127 characters long. It is used to identify the device in other related directives. For the complete description of all back-end sub-options, see the man page (man 1 qemu). A brief description of the available back-ends follows:

- null

Creates an empty device that outputs no data and drops any data it receives.

- stdio

Connects to QEMU's process standard input and standard output.

- socket

Creates a two-way stream socket. If PATH is specified, a Unix socket is created:

    > sudo qemu-system-x86_64 [...] \
      -chardev socket,id=unix_socket1,path=/tmp/unix_socket1,server

The SERVER suboption specifies that the socket is a listening socket.

If PORT is specified, a TCP socket is created:

    > sudo qemu-system-x86_64 [...] \
      -chardev socket,id=tcp_socket1,host=localhost,port=7777,server,nowait

The command creates a local listening (server) TCP socket on port 7777. QEMU does not block waiting for a client to connect to the listening port (nowait).

- udp

Sends all network traffic from VM Guest to a remote host over the UDP protocol.

    > sudo qemu-system-x86_64 [...] \
      -chardev udp,id=udp_fwd,host=mercury.example.com,port=7777

The command binds port 7777 on the remote host mercury.example.com and sends VM Guest network traffic there.

- vc

Creates a new QEMU text console. You can optionally specify the dimensions of the virtual console:

    > sudo qemu-system-x86_64 [...] -chardev vc,id=vc1,width=640,height=480 \
      -mon chardev=vc1

The command creates a new virtual console called vc1 of the specified size, and connects the QEMU monitor to it.

- file

Logs all traffic from VM Guest to a file on VM Host Server. The path is required and is automatically created if it does not exist.

    > sudo qemu-system-x86_64 [...] \
      -chardev file,id=qemu_log1,path=/var/log/qemu/guest1.log

By default QEMU creates a set of character devices for serial and parallel ports, and a special console for QEMU monitor. However, you can create your own character devices and use them for the mentioned purposes. The following options may help you:

- -serial CHAR_DEV

Redirects the VM Guest's virtual serial port to a character device CHAR_DEV on VM Host Server. By default, it is a virtual console (vc) in graphical mode, and stdio in non-graphical mode. The -serial option understands many sub-options. See the man page man 1 qemu for a complete list of them.

You can emulate up to four serial ports. Use -serial none to disable all serial ports.

- -parallel DEVICE

Redirects the VM Guest's parallel port to a DEVICE. This option supports the same devices as -serial.

Tip

With openSUSE Leap as a VM Host Server, you can directly use the hardware parallel port devices /dev/parportN where N is the number of the port.

You can emulate up to three parallel ports. Use -parallel none to disable all parallel ports.

- -monitor CHAR_DEV

Redirects the QEMU monitor to a character device CHAR_DEV on VM Host Server. This option supports the same devices as -serial. By default, it is a virtual console (vc) in a graphical mode, and stdio in non-graphical mode.

For a complete list of available character device back-ends, see the man page (man 1 qemu).

36.4 Networking in QEMU

Use the -netdev option in combination with -device to define a specific type of networking and a network interface card for your VM Guest. The syntax for the -netdev option is

    -netdev type[,prop[=value][,...]]

Currently, SUSE supports the following network types: user, bridge, and tap. For a complete list of -netdev sub-options, see the man page (man 1 qemu).

Supported -netdev sub-options

- bridge

Uses a specified network helper to configure the TAP interface and attach it to a specified bridge. For more information, see Section 36.4.3, “Bridged networking”.

- user

Specifies user-mode networking. For more information, see Section 36.4.2, “User-mode networking”.

- tap

Specifies bridged or routed networking. For more information, see Section 36.4.3, “Bridged networking”.

36.4.1 Defining a network interface card

Use -netdev together with the related -device option to add a new emulated network card:

    > sudo qemu-system-x86_64 [...] \
      -netdev tap,id=hostnet0 \
      -device virtio-net-pci,netdev=hostnet0,vlan=1,macaddr=00:16:35:AF:94:4B,name=ncard1

- -netdev tap

Specifies the network device type.

- -device virtio-net-pci

Specifies the model of the network card.

- vlan=1

Connects the network interface to VLAN number 1. You can specify your own number; it is mainly useful for identification purposes. If you omit this suboption, QEMU uses the default 0.

- macaddr=00:16:35:AF:94:4B

Specifies the Media Access Control (MAC) address for the network card. It is a unique identifier and you are advised to always specify it. If you do not, QEMU supplies its own default MAC address, which can cause a MAC address conflict within the related VLAN.
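Because every network card should get its own explicit MAC address, it can help to generate one per guest. The following is a minimal sketch that prints a random address. It assumes the 52:54:00 prefix, which is conventionally used for QEMU/KVM guests and differs from the address in the example above. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: generate a random MAC address so that every guest NIC gets its
# own explicit address. The 52:54:00 prefix is an assumption (commonly
# used for QEMU/KVM guests); adapt it to your network policy.
random_mac() {
    # Read three random bytes and print them as colon-separated hex.
    suffix=$(od -An -N3 -tx1 /dev/urandom | tr -d ' \n' | sed 's/\(..\)\(..\)\(..\)/\1:\2:\3/')
    printf '52:54:00:%s\n' "$suffix"
}

random_mac
```

Store the generated value together with the guest definition so that the guest keeps the same address across restarts.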

36.4.2 User-mode networking

The -netdev user option instructs QEMU to use user-mode networking. This is the default if no networking mode is selected. Therefore, these command lines are equivalent:

    > sudo qemu-system-x86_64 -hda /images/sles_base.raw

    > sudo qemu-system-x86_64 -hda /images/sles_base.raw -netdev user,id=hostnet0

This mode is useful to allow the VM Guest to access the external network resources, such as the Internet. By default, no incoming traffic is permitted and therefore, the VM Guest is not visible to other machines on the network. No administrator privileges are required in this networking mode. The user-mode is also useful for doing a network boot on your VM Guest from a local directory on VM Host Server.

The VM Guest allocates an IP address from a virtual DHCP server. VM Host Server (the DHCP server) is reachable at 10.0.2.2, while the IP address range for allocation starts from 10.0.2.15. You can use ssh to connect to VM Host Server at 10.0.2.2, and scp to copy files back and forth.

36.4.2.1 Command line examples

This section shows several examples of how to set up user-mode networking with QEMU.

Example 36.1: Restricted user-mode networking

    > sudo qemu-system-x86_64 [...] \
      -netdev user,id=hostnet0,restrict=yes \
      -device virtio-net-pci,netdev=hostnet0,vlan=1,name=user_net1

- -netdev user

Specifies user-mode networking.

- vlan=1

Connects to VLAN number 1. If omitted, defaults to 0.

- name=user_net1

Specifies a human-readable name of the network stack. Useful when identifying it in the QEMU monitor.

- restrict=yes

Isolates VM Guest. It then cannot communicate with VM Host Server and no network packets are routed to the external network. This is a suboption of -netdev user.

Example 36.2: User-mode networking with custom IP range

    > sudo qemu-system-x86_64 [...] \
      -netdev user,id=hostnet0,net=10.2.0.0/8,host=10.2.0.6,dhcpstart=10.2.0.20,hostname=tux_kvm_guest \
      -device virtio-net-pci,netdev=hostnet0

- net=10.2.0.0/8

Specifies the IP address of the network that VM Guest sees and optionally the netmask. Default is 10.0.2.0/24.

- host=10.2.0.6

Specifies the VM Host Server IP address that VM Guest sees. Default is 10.0.2.2.

- dhcpstart=10.2.0.20

Specifies the first of the 16 IP addresses that the built-in DHCP server can assign to VM Guest. Default is 10.0.2.15.

- hostname=tux_kvm_guest

Specifies the host name that the built-in DHCP server assigns to VM Guest.

Example 36.3: User-mode networking with network-boot and TFTP

    > sudo qemu-system-x86_64 [...] \
      -netdev user,id=hostnet0,tftp=/images/tftp_dir,bootfile=/images/boot/pxelinux.0 \
      -device virtio-net-pci,netdev=hostnet0

- tftp=/images/tftp_dir: Activates a built-in TFTP (a file transfer protocol with the functionality of a basic FTP) server. The files in the specified directory are visible to a VM Guest as the root of a TFTP server.
- bootfile=/images/boot/pxelinux.0: Broadcasts the specified file as a BOOTP (a network protocol that offers an IP address and a network location of a boot image, often used in diskless workstations) file. When used together with tftp, VM Guest can boot over the network from a local directory on the host.
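
Before starting a network boot, it can help to confirm that the boot file is actually reachable inside the exported directory. The following sketch does only that check; the function name tftp_bootfile_ok is illustrative, and it assumes the boot file name is given relative to the TFTP root, which is how a TFTP client requests it.

```shell
# Check that a boot file is readable inside the directory exported via tftp=.
# Arguments: $1 = TFTP root directory, $2 = boot file path relative to that root.
# Assumption: the boot file is addressed relative to the TFTP root.
tftp_bootfile_ok() {
    local tftp_root=$1
    local bootfile=$2
    if [ ! -d "$tftp_root" ]; then
        echo "TFTP root $tftp_root is not a directory" >&2
        return 1
    fi
    if [ ! -r "$tftp_root/$bootfile" ]; then
        echo "boot file $bootfile is not readable under $tftp_root" >&2
        return 1
    fi
    return 0
}

# Example call (paths are hypothetical):
# tftp_bootfile_ok /images/tftp_dir pxelinux.0 && echo "ready for network boot"
```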

Example 36.4: User-mode networking with host port forwarding

    > sudo qemu-system-x86_64 [...] \
      -netdev user,id=hostnet0,hostfwd=tcp::2222-:22 \
      -device virtio-net-pci,netdev=hostnet0

Forwards incoming TCP connections to port 2222 on the host to port 22 (SSH) on VM Guest. If sshd is running on VM Guest, enter

    > ssh qemu_host -p 2222

where qemu_host is the host name or IP address of the host system, to get an SSH prompt from VM Guest.
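
The hostfwd value follows the pattern protocol:[host address]:host port-[guest address]:guest port, with both addresses left empty in the example. The following sketch builds such a value from its parts and rejects obviously invalid input; the function name hostfwd_rule is illustrative and not part of QEMU.

```shell
# Build a hostfwd= suboption that forwards a host port to a guest port.
# Arguments: $1 = protocol (tcp or udp), $2 = host port, $3 = guest port.
hostfwd_rule() {
    local proto=$1
    local host_port=$2
    local guest_port=$3
    local port
    case $proto in
        tcp|udp) ;;
        *) echo "protocol must be tcp or udp" >&2; return 1 ;;
    esac
    for port in "$host_port" "$guest_port"; do
        case $port in
            ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
        esac
        if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
            echo "port out of range: $port" >&2
            return 1
        fi
    done
    # Host and guest addresses are left empty, as in the example.
    echo "hostfwd=${proto}::${host_port}-:${guest_port}"
}

hostfwd_rule tcp 2222 22    # prints hostfwd=tcp::2222-:22
```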

36.4.3 Bridged networking

With the -netdev tap option, QEMU creates a network bridge by connecting the host TAP network device to a specified VLAN of VM Guest. Its network interface is then visible to the rest of the network. This method does not work by default and needs to be explicitly specified.

First, create a network bridge and add a VM Host Server physical network interface to it, such as eth0:

Start and select › .

Click and select from the drop-down box in the window. Click .

Choose whether you need a dynamically or statically assigned IP address, and fill the related network settings if applicable.

In the pane, select the Ethernet device to add to the bridge.

Click . When asked about adapting an already configured device, click .

Click to apply the changes. Check if the bridge is created:

    > bridge link
    2: eth0 state UP : <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master br0 \
      state forwarding priority 32 cost 100
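
The same check can be scripted. The following sketch reads bridge link output from standard input and prints the bridge an interface is attached to; the function name bridge_master_of is illustrative, and the parsing assumes the word master is followed directly by the bridge name, as in the output shown above.

```shell
# Print the bridge that an interface is attached to, reading
# "bridge link" output from standard input.
# Argument: $1 = interface name, for example eth0.
bridge_master_of() {
    awk -v ifname="$1" '
        {
            # Field 2 is the interface name; some versions append a colon
            # or an @peer suffix, so strip that before comparing.
            name = $2
            sub(/[:@].*/, "", name)
            if (name != ifname) next
            for (i = 3; i < NF; i++) {
                if ($i == "master") { print $(i + 1); exit }
            }
        }
    '
}

# Example call on a live system:
# bridge link | bridge_master_of eth0
```

An empty result means the interface is not attached to any bridge.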

36.4.3.1 Connecting to a bridge manually

Use the following example script to connect VM Guest to the newly created bridge interface br0. Several commands in the script are run via the sudo mechanism because they require root privileges.

Tip: Required software

To manage a network bridge, you need to have the tunctl package installed.

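    #!/bin/bash
    bridge=br0
    # Create a TAP device owned by the current user and bring it up.
    tap=$(sudo tunctl -u "$(whoami)" -b)
    sudo ip link set "$tap" up
    sleep 1s
    # Create the bridge, bring it up, and attach the TAP device to it.
    sudo ip link add name "$bridge" type bridge
    sudo ip link set "$bridge" up
    sudo ip link set "$tap" master "$bridge"
    qemu-system-x86_64 -machine accel=kvm -m 512 -hda /images/sles_base.raw \
      -netdev tap,id=hostnet0,ifname="$tap",script=no,downscript=no \
      -device virtio-net-pci,netdev=hostnet0,mac=00:16:35:AF:94:4B
    # Detach and remove the TAP device after QEMU exits.
    sudo ip link set "$tap" nomaster
    sudo ip link set "$tap" down
    sudo tunctl -d "$tap"

- bridge=br0: Name of the bridge device.
- tap=$(sudo tunctl ...): Prepare a new TAP device and assign it to the user who runs the script. TAP devices are virtual network devices often used for virtualization and emulation setups.
- ip link set $tap up: Bring up the newly created TAP network interface.
- sleep 1s: Make a 1-second pause to make sure the new TAP network interface is really up.
- ip link add, ip link set ... master: Add the new bridge interface, bring it up, and add the new TAP interface to the bridge.
- ifname=$tap: The ifname= suboption specifies the name of the TAP network interface that QEMU uses.
- script=no, downscript=no: Before qemu-system-ARCH connects to a network bridge, it normally runs a network setup script (by default /etc/qemu-ifup), and a tear-down script (by default /etc/qemu-ifdown) when it exits. Setting both suboptions to no disables the scripts, because this example configures the TAP device itself.
- ip link set $tap nomaster: Deletes the TAP interface from the network bridge.
- ip link set $tap down: Sets the state of the TAP device to down.
- tunctl -d $tap: Tear down the TAP device.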
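
If QEMU fails to start or the script is interrupted, the tear-down commands at the end of the script never run. The following sketch shows one possible variation that moves them into an EXIT trap. The names run, cleanup, start_guest and DRY_RUN are illustrative. The sketch assumes the bridge br0 already exists and that ifname, script and downscript are given on -netdev tap, which is where current QEMU releases expect them. With DRY_RUN=1 (the default here), the commands are only printed.

```shell
#!/bin/bash
# DRY_RUN=1 (the default in this sketch) prints each command instead of running it.
DRY_RUN=${DRY_RUN:-1}
bridge=br0   # assumed to exist already
tap=

# Run a command, or only print it in dry-run mode.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Undo the TAP setup; registered as an EXIT trap by start_guest.
cleanup() {
    # Nothing to undo if the TAP device was never created.
    [ -n "$tap" ] || return 0
    run sudo ip link set "$tap" nomaster
    run sudo ip link set "$tap" down
    run sudo tunctl -d "$tap"
}

start_guest() {
    trap cleanup EXIT
    if [ "$DRY_RUN" = 1 ]; then
        tap=tap0    # placeholder; tunctl chooses the real name
    else
        tap=$(sudo tunctl -u "$(whoami)" -b)
    fi
    run sudo ip link set "$tap" up
    run sudo ip link set "$tap" master "$bridge"
    run qemu-system-x86_64 -machine accel=kvm -m 512 -hda /images/sles_base.raw \
        -netdev tap,id=hostnet0,ifname="$tap",script=no,downscript=no \
        -device virtio-net-pci,netdev=hostnet0
}

# Usage: call start_guest at the end of the script.
# DRY_RUN=1 prints the command sequence; DRY_RUN=0 executes it.
```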

36.4.3.2 Connecting to a bridge with qemu-bridge-helper

Another way to connect VM Guest to a network through a network bridge is via the qemu-bridge-helper helper program. It configures the TAP interface for you, and attaches it to the specified bridge. The default helper executable is /usr/lib/qemu-bridge-helper. The helper executable is setuid root and can be executed only by members of the virtualization group (kvm). Therefore the qemu-system-ARCH command itself does not need to be run under root privileges.

The helper is automatically called when you specify a network bridge:

    qemu-system-x86_64 [...] \
      -netdev bridge,id=hostnet0,br=br0 \
      -device virtio-net-pci,netdev=hostnet0

You can specify your own custom helper script that takes care of the TAP device (de)configuration, with the helper=/path/to/your/helper option:

    qemu-system-x86_64 [...] \
      -netdev bridge,id=hostnet0,br=br0,helper=/path/to/bridge-helper \
      -device virtio-net-pci,netdev=hostnet0

Tip

To define access privileges to qemu-bridge-helper, inspect the /etc/qemu/bridge.conf file. For example, the following directive

    allow br0

allows the qemu-system-ARCH command to connect its VM Guest to the network bridge br0.
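
To check in advance whether a given bridge is permitted, the access file can be scanned with a short function. This is a sketch only: the function name bridge_allowed is illustrative, and it assumes that rules are evaluated from top to bottom, that the last matching allow or deny line wins, and that include directives can be ignored.

```shell
# Decide whether a bridge is permitted by an access file in bridge.conf format.
# Arguments: $1 = path to the access file, $2 = bridge name.
# Assumptions: the last matching "allow" or "deny" rule wins, access is
# denied when no rule matches, and "include" directives are not followed.
bridge_allowed() {
    awk -v br="$2" '
        /^[ \t]*#/ { next }   # skip comment lines
        $1 == "allow" && ($2 == br || $2 == "all") { ok = 1 }
        $1 == "deny"  && ($2 == br || $2 == "all") { ok = 0 }
        END { exit (ok ? 0 : 1) }
    ' "$1"
}

# Example call:
# bridge_allowed /etc/qemu/bridge.conf br0 && echo "br0 is allowed"
```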

36.5 Viewing a VM Guest with VNC

By default, QEMU uses a GTK (a cross-platform toolkit library) window to display the graphical output of a VM Guest. With the -vnc option specified, you can make QEMU listen on a specified VNC display and redirect its graphical output to the VNC session.

Tip

When working with QEMU's virtual machine via a VNC session, it is useful to work with the -usbdevice tablet option.

Moreover, if you need a keyboard layout other than the default en-us, specify it with the -k option.

The first suboption of -vnc must be a display value. The -vnc option understands the following display specifications:

- host:display
  Only connections from host on the display number display are accepted. The TCP port on which the VNC session is then running is normally 5900 + display number. If you do not specify host, connections are accepted from any host.
- unix:path
  The VNC server listens for connections on Unix domain sockets. The path option specifies the location of the related Unix socket.
- none
  The VNC server functionality is initialized, but the server itself is not started. You can start the VNC server later with the QEMU monitor. For more information, see Chapter 37, Virtual machine administration using QEMU monitor.
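
The relation between display number and TCP port is simple arithmetic. The following sketch computes the port for a given display; the function name vnc_port is illustrative.

```shell
# Print the TCP port for a VNC display number (5900 + display).
# Argument: $1 = display number without the leading colon.
vnc_port() {
    case $1 in
        ''|*[!0-9]*)
            echo "display must be a non-negative integer" >&2
            return 1
            ;;
    esac
    echo $((5900 + $1))
}

vnc_port 5    # prints 5905
```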

Following the display value there may be one or more option flags separated by commas. Valid options are:

- reverse
  Connect to a listening VNC client via a reverse connection.
- websocket
  Opens an additional TCP listening port dedicated to VNC Websocket connections. By default, the Websocket port is 5700 + display.
- password
  Require that password-based authentication is used for client connections.
- tls
  Require that clients use TLS when communicating with the VNC server.
- x509=/path/to/certificate/dir
  Valid if TLS is specified. Require that x509 credentials are used for negotiating the TLS session.
- x509verify=/path/to/certificate/dir
  Valid if TLS is specified. Require that x509 credentials are used for negotiating the TLS session. In addition, the server asks the client for a certificate and validates it against the CA certificate.
- sasl
  Require that the client uses SASL to authenticate with the VNC server.
- acl
  Turn on access control lists for checking of the x509 client certificate and SASL party.
- lossy
  Enable lossy compression methods (gradient, JPEG, ...).
- non-adaptive
  Disable adaptive encodings. Adaptive encodings are enabled by default.
- share=[allow-exclusive|force-shared|ignore]
  Set display sharing policy.
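
The display value and the option flags are joined with commas into a single argument for -vnc. The following sketch assembles that argument and rejects flags that are not in the list above; the function name vnc_arg is illustrative, and the accepted flag names are taken from this section only.

```shell
# Join a display value and option flags into one argument for -vnc.
# Arguments: $1 = display value, remaining arguments = option flags.
vnc_arg() {
    local arg=$1
    local flag
    shift
    for flag in "$@"; do
        case $flag in
            reverse|websocket|password|tls|sasl|acl|lossy|non-adaptive) ;;
            x509|x509=*|x509verify=*|share=*) ;;
            *) echo "unknown -vnc option: $flag" >&2; return 1 ;;
        esac
        arg="$arg,$flag"
    done
    echo "$arg"
}

vnc_arg :5 password tls x509verify=/etc/pki/qemu
# prints :5,password,tls,x509verify=/etc/pki/qemu
```

The result can then be passed on the command line, for example as -vnc "$(vnc_arg :5 password)".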

Note

For more details about the display options, see the qemu-doc man page.

An example VNC usage:

    tux > sudo qemu-system-x86_64 [...] -vnc :5
    # (on the client:)
    wilber > vncviewer venus:5 &

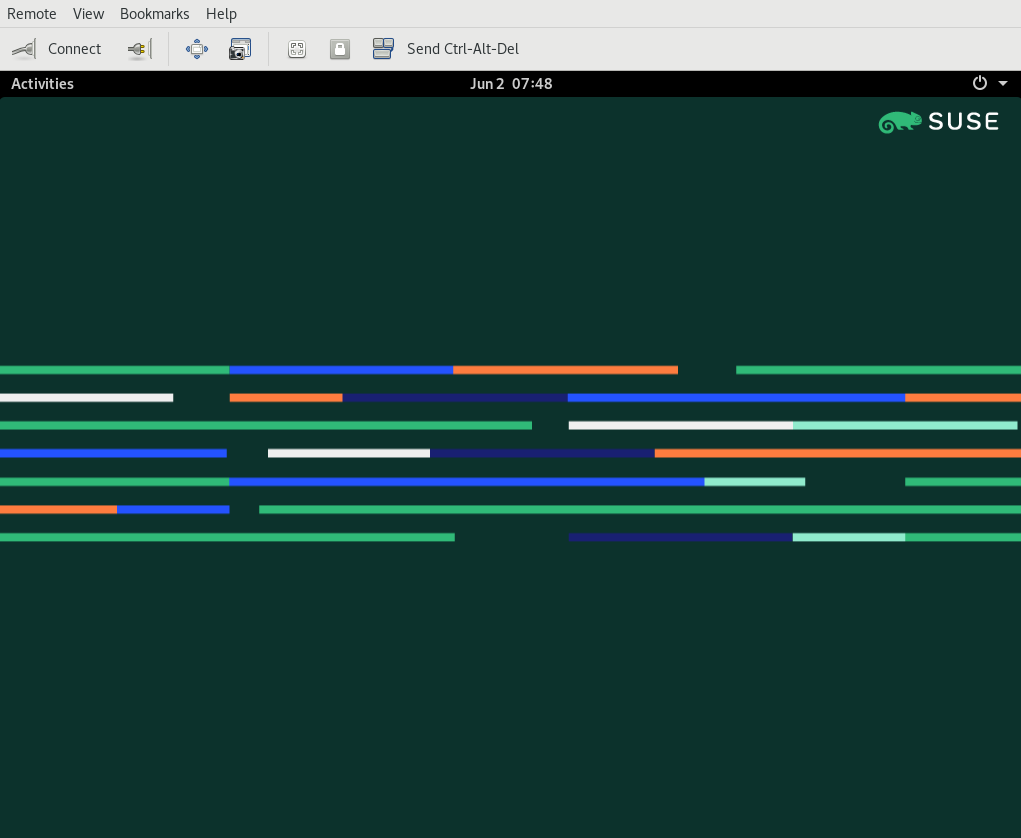

Figure 36.2: QEMU VNC session

36.5.1 Secure VNC connections

The default VNC server setup does not use any form of authentication. In the previous example, any user can connect and view the QEMU VNC session from any host on the network.

There are several levels of security that you can apply to your VNC client/server connection. You can either protect your connection with a password, use x509 certificates, use SASL authentication, or even combine several authentication methods in one QEMU command.

For more information about configuring x509 certificates on a VM Host Server and the client, see Section 11.3.2, “Remote TLS/SSL connection with x509 certificate (qemu+tls or xen+tls)” and Section 11.3.2.3, “Configuring the client and testing the setup”.

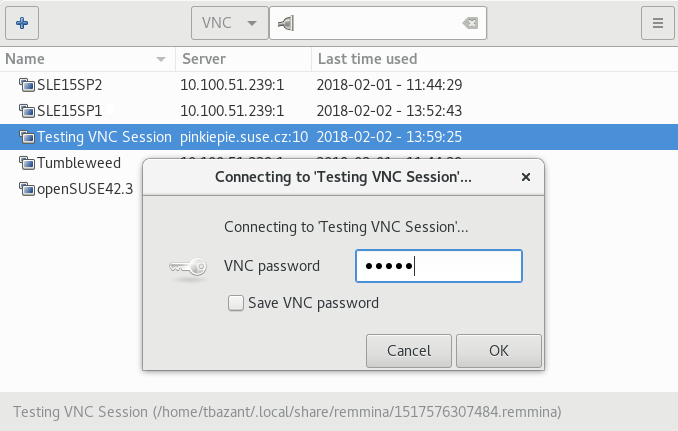

The Remmina VNC viewer supports advanced authentication mechanisms. For this example, let us assume that the server x509 certificates ca-cert.pem, server-cert.pem, and server-key.pem are located in the /etc/pki/qemu directory on the host. The client certificates can be placed in any custom directory, as Remmina asks for their path on the connection start-up.
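
Before starting QEMU with TLS enabled, you may want to confirm that the three server files are in place. The following sketch checks for them in a given directory; the function name check_vnc_certs is illustrative, and only the file names mentioned above are assumed.

```shell
# Verify that a directory holds the three server certificate files.
# Argument: $1 = certificate directory, for example /etc/pki/qemu.
check_vnc_certs() {
    local dir=$1
    local missing=0
    local f
    for f in ca-cert.pem server-cert.pem server-key.pem; do
        if [ ! -r "$dir/$f" ]; then
            echo "missing or unreadable: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call:
# check_vnc_certs /etc/pki/qemu && echo "certificates found"
```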

Example 36.5: Password authentication

    qemu-system-x86_64 [...] -vnc :5,password -monitor stdio

Starts the VM Guest graphical output on VNC display number 5, which corresponds to port 5905. The password suboption initializes a simple password-based authentication method. There is no password set by default and you need to set one with the change vnc password command in QEMU monitor:

    QEMU 2.3.1 monitor - type 'help' for more information
    (qemu) change vnc password
    Password: ****

You need the -monitor stdio option here, because you would not be able to manage the QEMU monitor without redirecting its input/output.

Figure 36.3: Authentication dialog in Remmina

Example 36.6: x509 certificate authentication

The QEMU VNC server can use TLS encryption for the session and x509 certificates for authentication. The server asks the client for a certificate and validates it against the CA certificate. Use this authentication type if your company provides an internal certificate authority.

qemu-system-x86_64 [...] -vnc :5,tls,x509verify=/etc/pki/qemu

Example 36.7: x509 certificate and password authentication

You can combine the password authentication with TLS encryption and x509 certificate authentication to create a two-layer authentication model for clients. Remember to set the password in the QEMU monitor after you run the following command:

    qemu-system-x86_64 [...] -vnc :5,password,tls,x509verify=/etc/pki/qemu \
      -monitor stdio

Example 36.8: SASL authentication

Simple Authentication and Security Layer (SASL) is a framework for authentication and data security in Internet protocols. It integrates several authentication mechanisms, like PAM, Kerberos, LDAP and more. SASL keeps its own user database, so the connecting user accounts do not need to exist on VM Host Server.

For security reasons, you are advised to combine SASL authentication with TLS encryption and x509 certificates:

qemu-system-x86_64 [...] -vnc :5,tls,x509,sasl -monitor stdio