5 Compute #

The OpenStack Compute service allows you to control an Infrastructure-as-a-Service (IaaS) cloud computing platform. It gives you control over instances and networks, and allows you to manage access to the cloud through users and projects.

Compute does not include virtualization software. Instead, it defines drivers that interact with underlying virtualization mechanisms that run on your host operating system, and exposes functionality over a web-based API.

5.1 System architecture #

OpenStack Compute contains several main components.

The cloud controller represents the global state and interacts with the other components. The

API serveracts as the web services front end for the cloud controller. Thecompute controllerprovides compute server resources and usually also contains the Compute service.The

object storeis an optional component that provides storage services; you can also use OpenStack Object Storage instead.An

auth managerprovides authentication and authorization services when used with the Compute system; you can also use OpenStack Identity as a separate authentication service instead.A

volume controllerprovides fast and permanent block-level storage for the compute servers.The

network controllerprovides virtual networks to enable compute servers to interact with each other and with the public network. You can also use OpenStack Networking instead.The

scheduleris used to select the most suitable compute controller to host an instance.

Compute uses a messaging-based, shared nothing architecture. All

major components exist on multiple servers, including the compute,

volume, and network controllers, and the Object Storage or Image service.

The state of the entire system is stored in a database. The cloud

controller communicates with the internal object store using HTTP, but

it communicates with the scheduler, network controller, and volume

controller using Advanced Message Queuing Protocol (AMQP). To avoid

blocking a component while waiting for a response, Compute uses

asynchronous calls, with a callback that is triggered when a response is

received.

5.1.1 Hypervisors #

Compute controls hypervisors through an API server. Selecting the best hypervisor to use can be difficult, and you must take budget, resource constraints, supported features, and required technical specifications into account. However, the majority of OpenStack development is done on systems using KVM and Xen-based hypervisors. For a detailed list of features and support across different hypervisors, see the Feature Support Matrix.

You can also orchestrate clouds using multiple hypervisors in different availability zones. Compute supports the following hypervisors:

For more information about hypervisors, see the Hypervisors section in the OpenStack Configuration Reference.

5.1.2 Projects, users, and roles #

The Compute system is designed to be used by different consumers in the form of projects on a shared system, and role-based access assignments. Roles control the actions that a user is allowed to perform.

Projects are isolated resource containers that form the principal

organizational structure within the Compute service. They consist of an

individual VLAN, and volumes, instances, images, keys, and users. A user

can specify the project by appending project_id to their access key.

If no project is specified in the API request, Compute attempts to use a

project with the same ID as the user.

For projects, you can use quota controls to limit the:

Number of volumes that can be launched.

Number of processor cores and the amount of RAM that can be allocated.

Floating IP addresses assigned to any instance when it launches. This allows instances to have the same publicly accessible IP addresses.

Fixed IP addresses assigned to the same instance when it launches. This allows instances to have the same publicly or privately accessible IP addresses.

Roles control the actions a user is allowed to perform. By default, most

actions do not require a particular role, but you can configure them by

editing the policy.json file for user roles. For example, a rule can

be defined so that a user must have the admin role in order to be

able to allocate a public IP address.

A project limits users' access to particular images. Each user is assigned a user name and password. Keypairs granting access to an instance are enabled for each user, but quotas are set, so that each project can control resource consumption across available hardware resources.

Earlier versions of OpenStack used the term tenant instead of

project. Because of this legacy terminology, some command-line tools

use --tenant_id where you would normally expect to enter a

project ID.

5.1.3 Block storage #

OpenStack provides two classes of block storage: ephemeral storage and persistent volume.

Ephemeral storage

Ephemeral storage includes a root ephemeral volume and an additional ephemeral volume.

The root disk is associated with an instance, and exists only for the life of this very instance. Generally, it is used to store an instance's root file system, persists across the guest operating system reboots, and is removed on an instance deletion. The amount of the root ephemeral volume is defined by the flavor of an instance.

In addition to the ephemeral root volume, all default types of flavors,

except m1.tiny, which is the smallest one, provide an additional

ephemeral block device sized between 20 and 160 GB (a configurable value

to suit an environment). It is represented as a raw block device with no

partition table or file system. A cloud-aware operating system can

discover, format, and mount such a storage device. OpenStack Compute

defines the default file system for different operating systems as Ext4

for Linux distributions, VFAT for non-Linux and non-Windows operating

systems, and NTFS for Windows. However, it is possible to specify any

other filesystem type by using virt_mkfs or

default_ephemeral_format configuration options.

For example, the cloud-init package included into an Ubuntu's stock

cloud image, by default, formats this space as an Ext4 file system

and mounts it on /mnt. This is a cloud-init feature, and is not

an OpenStack mechanism. OpenStack only provisions the raw storage.

Persistent volume

A persistent volume is represented by a persistent virtualized block device independent of any particular instance, and provided by OpenStack Block Storage.

Only a single configured instance can access a persistent volume. Multiple instances cannot access a persistent volume. This type of configuration requires a traditional network file system to allow multiple instances accessing the persistent volume. It also requires a traditional network file system like NFS, CIFS, or a cluster file system such as GlusterFS. These systems can be built within an OpenStack cluster, or provisioned outside of it, but OpenStack software does not provide these features.

You can configure a persistent volume as bootable and use it to provide a persistent virtual instance similar to the traditional non-cloud-based virtualization system. It is still possible for the resulting instance to keep ephemeral storage, depending on the flavor selected. In this case, the root file system can be on the persistent volume, and its state is maintained, even if the instance is shut down. For more information about this type of configuration, see Introduction to the Block Storage service in the OpenStack Configuration Reference.

A persistent volume does not provide concurrent access from multiple instances. That type of configuration requires a traditional network file system like NFS, or CIFS, or a cluster file system such as GlusterFS. These systems can be built within an OpenStack cluster, or provisioned outside of it, but OpenStack software does not provide these features.

5.1.4 EC2 compatibility API #

In addition to the native compute API, OpenStack provides an EC2-compatible API. This API allows EC2 legacy workflows built for EC2 to work with OpenStack.

Nova in tree EC2-compatible API is deprecated. The ec2-api project is working to implement the EC2 API.

You can use numerous third-party tools and language-specific SDKs to interact with OpenStack clouds. You can use both native and compatibility APIs. Some of the more popular third-party tools are:

- Euca2ools

A popular open source command-line tool for interacting with the EC2 API. This is convenient for multi-cloud environments where EC2 is the common API, or for transitioning from EC2-based clouds to OpenStack. For more information, see the Eucalyptus Documentation.

- Hybridfox

A Firefox browser add-on that provides a graphical interface to many popular public and private cloud technologies, including OpenStack. For more information, see the hybridfox site.

- boto

Python library for interacting with Amazon Web Services. You can use this library to access OpenStack through the EC2 compatibility API. For more information, see the boto project page on GitHub.

- fog

A Ruby cloud services library. It provides methods to interact with a large number of cloud and virtualization platforms, including OpenStack. For more information, see the fog site.

- php-opencloud

A PHP SDK designed to work with most OpenStack-based cloud deployments, as well as Rackspace public cloud. For more information, see the php-opencloud site.

5.1.5 Building blocks #

In OpenStack the base operating system is usually copied from an image stored in the OpenStack Image service. This is the most common case and results in an ephemeral instance that starts from a known template state and loses all accumulated states on virtual machine deletion. It is also possible to put an operating system on a persistent volume in the OpenStack Block Storage volume system. This gives a more traditional persistent system that accumulates states which are preserved on the OpenStack Block Storage volume across the deletion and re-creation of the virtual machine. To get a list of available images on your system, run:

$ openstack image list +--------------------------------------+-----------------------------+--------+ | ID | Name | Status | +--------------------------------------+-----------------------------+--------+ | aee1d242-730f-431f-88c1-87630c0f07ba | Ubuntu 14.04 cloudimg amd64 | active | | 0b27baa1-0ca6-49a7-b3f4-48388e440245 | Ubuntu 14.10 cloudimg amd64 | active | | df8d56fc-9cea-4dfd-a8d3-28764de3cb08 | jenkins | active | +--------------------------------------+-----------------------------+--------+

The displayed image attributes are:

-

ID Automatically generated UUID of the image

-

Name Free form, human-readable name for image

-

Status The status of the image. Images marked

ACTIVEare available for use.-

Server For images that are created as snapshots of running instances, this is the UUID of the instance the snapshot derives from. For uploaded images, this field is blank.

Virtual hardware templates are called flavors. The default

installation provides five flavors. By default, these are configurable

by admin users, however that behavior can be changed by redefining the

access controls for compute_extension:flavormanage in

/etc/nova/policy.json on the compute-api server.

For a list of flavors that are available on your system:

$ openstack flavor list +-----+-----------+-------+------+-----------+-------+-----------+ | ID | Name | RAM | Disk | Ephemeral | VCPUs | Is_Public | +-----+-----------+-------+------+-----------+-------+-----------+ | 1 | m1.tiny | 512 | 1 | 0 | 1 | True | | 2 | m1.small | 2048 | 20 | 0 | 1 | True | | 3 | m1.medium | 4096 | 40 | 0 | 2 | True | | 4 | m1.large | 8192 | 80 | 0 | 4 | True | | 5 | m1.xlarge | 16384 | 160 | 0 | 8 | True | +-----+-----------+-------+------+-----------+-------+-----------+

5.1.6 Compute service architecture #

These basic categories describe the service architecture and information about the cloud controller.

API server

At the heart of the cloud framework is an API server, which makes command and control of the hypervisor, storage, and networking programmatically available to users.

The API endpoints are basic HTTP web services which handle authentication, authorization, and basic command and control functions using various API interfaces under the Amazon, Rackspace, and related models. This enables API compatibility with multiple existing tool sets created for interaction with offerings from other vendors. This broad compatibility prevents vendor lock-in.

Message queue

A messaging queue brokers the interaction between compute nodes (processing), the networking controllers (software which controls network infrastructure), API endpoints, the scheduler (determines which physical hardware to allocate to a virtual resource), and similar components. Communication to and from the cloud controller is handled by HTTP requests through multiple API endpoints.

A typical message passing event begins with the API server receiving a request from a user. The API server authenticates the user and ensures that they are permitted to issue the subject command. The availability of objects implicated in the request is evaluated and, if available, the request is routed to the queuing engine for the relevant workers. Workers continually listen to the queue based on their role, and occasionally their type host name. When an applicable work request arrives on the queue, the worker takes assignment of the task and begins executing it. Upon completion, a response is dispatched to the queue which is received by the API server and relayed to the originating user. Database entries are queried, added, or removed as necessary during the process.

Compute worker

Compute workers manage computing instances on host machines. The API dispatches commands to compute workers to complete these tasks:

Run instances

Delete instances (Terminate instances)

Reboot instances

Attach volumes

Detach volumes

Get console output

Network Controller

The Network Controller manages the networking resources on host machines. The API server dispatches commands through the message queue, which are subsequently processed by Network Controllers. Specific operations include:

Allocating fixed IP addresses

Configuring VLANs for projects

Configuring networks for compute nodes

5.2 Images and instances #

Virtual machine images contain a virtual disk that holds a bootable operating system on it. Disk images provide templates for virtual machine file systems. The Image service controls image storage and management.

Instances are the individual virtual machines that run on physical compute nodes inside the cloud. Users can launch any number of instances from the same image. Each launched instance runs from a copy of the base image. Any changes made to the instance do not affect the base image. Snapshots capture the state of an instances running disk. Users can create a snapshot, and build a new image based on these snapshots. The Compute service controls instance, image, and snapshot storage and management.

When you launch an instance, you must choose a flavor, which

represents a set of virtual resources. Flavors define virtual

CPU number, RAM amount available, and ephemeral disks size. Users

must select from the set of available flavors

defined on their cloud. OpenStack provides a number of predefined

flavors that you can edit or add to.

For more information about creating and troubleshooting images, see the OpenStack Virtual Machine Image Guide.

For more information about image configuration options, see the Image services section of the OpenStack Configuration Reference.

For more information about flavors, see Section 5.4.3, “Flavors”.

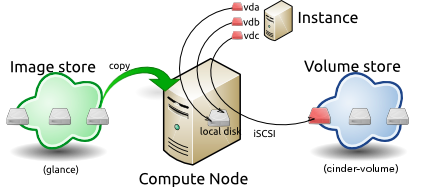

You can add and remove additional resources from running instances, such

as persistent volume storage, or public IP addresses. The example used

in this chapter is of a typical virtual system within an OpenStack

cloud. It uses the cinder-volume service, which provides persistent

block storage, instead of the ephemeral storage provided by the selected

instance flavor.

This diagram shows the system state prior to launching an instance. The

image store has a number of predefined images, supported by the Image

service. Inside the cloud, a compute node contains the

available vCPU, memory, and local disk resources. Additionally, the

cinder-volume service stores predefined volumes.

The base image state with no running instances

5.2.1 Instance Launch #

To launch an instance, select an image, flavor, and any optional

attributes. The selected flavor provides a root volume, labeled vda

in this diagram, and additional ephemeral storage, labeled vdb. In

this example, the cinder-volume store is mapped to the third virtual

disk on this instance, vdc.

Instance creation from an image

The Image service copies the base image from the image store to the

local disk. The local disk is the first disk that the instance

accesses, which is the root volume labeled vda. Smaller

instances start faster. Less data needs to be copied across

the network.

The new empty ephemeral disk is also created, labeled vdb.

This disk is deleted when you delete the instance.

The compute node connects to the attached cinder-volume using iSCSI. The

cinder-volume is mapped to the third disk, labeled vdc in this

diagram. After the compute node provisions the vCPU and memory

resources, the instance boots up from root volume vda. The instance

runs and changes data on the disks (highlighted in red on the diagram).

If the volume store is located on a separate network, the

my_block_storage_ip option specified in the storage node

configuration file directs image traffic to the compute node.

Some details in this example scenario might be different in your

environment. For example, you might use a different type of back-end

storage, or different network protocols. One common variant is that

the ephemeral storage used for volumes vda and vdb could be

backed by network storage rather than a local disk.

When you delete an instance, the state is reclaimed with the exception of the persistent volume. The ephemeral storage, whether encrypted or not, is purged. Memory and vCPU resources are released. The image remains unchanged throughout this process.

5.2.2 Image properties and property protection #

An image property is a key and value pair that the administrator or the image owner attaches to an OpenStack Image service image, as follows:

The administrator defines core properties, such as the image name.

The administrator and the image owner can define additional properties, such as licensing and billing information.

The administrator can configure any property as protected, which limits which policies or user roles can perform CRUD operations on that property. Protected properties are generally additional properties to which only administrators have access.

For unprotected image properties, the administrator can manage core properties and the image owner can manage additional properties.

To configure property protection

To configure property protection, edit the policy.json file. This file

can also be used to set policies for Image service actions.

Define roles or policies in the

policy.jsonfile:{ "context_is_admin": "role:admin", "default": "", "add_image": "", "delete_image": "", "get_image": "", "get_images": "", "modify_image": "", "publicize_image": "role:admin", "copy_from": "", "download_image": "", "upload_image": "", "delete_image_location": "", "get_image_location": "", "set_image_location": "", "add_member": "", "delete_member": "", "get_member": "", "get_members": "", "modify_member": "", "manage_image_cache": "role:admin", "get_task": "", "get_tasks": "", "add_task": "", "modify_task": "", "deactivate": "", "reactivate": "", "get_metadef_namespace": "", "get_metadef_namespaces":"", "modify_metadef_namespace":"", "add_metadef_namespace":"", "delete_metadef_namespace":"", "get_metadef_object":"", "get_metadef_objects":"", "modify_metadef_object":"", "add_metadef_object":"", "list_metadef_resource_types":"", "get_metadef_resource_type":"", "add_metadef_resource_type_association":"", "get_metadef_property":"", "get_metadef_properties":"", "modify_metadef_property":"", "add_metadef_property":"", "get_metadef_tag":"", "get_metadef_tags":"", "modify_metadef_tag":"", "add_metadef_tag":"", "add_metadef_tags":"" }For each parameter, use

"rule:restricted"to restrict access to all users or"role:admin"to limit access to administrator roles. For example:"download_image": "upload_image":

Define which roles or policies can manage which properties in a property protections configuration file. For example:

[x_none_read] create = context_is_admin read = ! update = ! delete = ! [x_none_update] create = context_is_admin read = context_is_admin update = ! delete = context_is_admin [x_none_delete] create = context_is_admin read = context_is_admin update = context_is_admin delete = !

A value of

@allows the corresponding operation for a property.A value of

!disallows the corresponding operation for a property.

In the

glance-api.conffile, define the location of a property protections configuration file.property_protection_file = {file_name}This file contains the rules for property protections and the roles and policies associated with it.

By default, property protections are not enforced.

If you specify a file name value and the file is not found, the

glance-apiservice does not start.To view a sample configuration file, see glance-api.conf.

Optionally, in the

glance-api.conffile, specify whether roles or policies are used in the property protections configuration fileproperty_protection_rule_format = roles

The default is

roles.To view a sample configuration file, see glance-api.conf.

5.2.3 Image download: how it works #

Prior to starting a virtual machine, transfer the virtual machine image to the compute node from the Image service. How this works can change depending on the settings chosen for the compute node and the Image service.

Typically, the Compute service will use the image identifier passed to it by the scheduler service and request the image from the Image API. Though images are not stored in glance—rather in a back end, which could be Object Storage, a filesystem or any other supported method—the connection is made from the compute node to the Image service and the image is transferred over this connection. The Image service streams the image from the back end to the compute node.

It is possible to set up the Object Storage node on a separate network,

and still allow image traffic to flow between the compute and object

storage nodes. Configure the my_block_storage_ip option in the

storage node configuration file to allow block storage traffic to reach

the compute node.

Certain back ends support a more direct method, where on request the

Image service will return a URL that links directly to the back-end store.

You can download the image using this approach. Currently, the only store

to support the direct download approach is the filesystem store.

Configured the approach using the filesystems option in

the image_file_url section of the nova.conf file on

compute nodes.

Compute nodes also implement caching of images, meaning that if an image has been used before it won't necessarily be downloaded every time. Information on the configuration options for caching on compute nodes can be found in the Configuration Reference.

5.2.4 Instance building blocks #

In OpenStack, the base operating system is usually copied from an image stored in the OpenStack Image service. This results in an ephemeral instance that starts from a known template state and loses all accumulated states on shutdown.

You can also put an operating system on a persistent volume in Compute or the Block Storage volume system. This gives a more traditional, persistent system that accumulates states that are preserved across restarts. To get a list of available images on your system, run:

$ openstack image list +--------------------------------------+-----------------------------+--------+ | ID | Name | Status | +--------------------------------------+-----------------------------+--------+ | aee1d242-730f-431f-88c1-87630c0f07ba | Ubuntu 14.04 cloudimg amd64 | active | +--------------------------------------+-----------------------------+--------+ | 0b27baa1-0ca6-49a7-b3f4-48388e440245 | Ubuntu 14.10 cloudimg amd64 | active | +--------------------------------------+-----------------------------+--------+ | df8d56fc-9cea-4dfd-a8d3-28764de3cb08 | jenkins | active | +--------------------------------------+-----------------------------+--------+

The displayed image attributes are:

-

ID Automatically generated UUID of the image.

-

Name Free form, human-readable name for the image.

-

Status The status of the image. Images marked

ACTIVEare available for use.-

Server For images that are created as snapshots of running instances, this is the UUID of the instance the snapshot derives from. For uploaded images, this field is blank.

Virtual hardware templates are called flavors. The default

installation provides five predefined flavors.

For a list of flavors that are available on your system, run:

$ openstack flavor list +-----+-----------+-------+------+-----------+-------+-----------+ | ID | Name | RAM | Disk | Ephemeral | VCPUs | Is_Public | +-----+-----------+-------+------+-----------+-------+-----------+ | 1 | m1.tiny | 512 | 1 | 0 | 1 | True | | 2 | m1.small | 2048 | 20 | 0 | 1 | True | | 3 | m1.medium | 4096 | 40 | 0 | 2 | True | | 4 | m1.large | 8192 | 80 | 0 | 4 | True | | 5 | m1.xlarge | 16384 | 160 | 0 | 8 | True | +-----+-----------+-------+------+-----------+-------+-----------+

By default, administrative users can configure the flavors. You can

change this behavior by redefining the access controls for

compute_extension:flavormanage in /etc/nova/policy.json on the

compute-api server.

5.2.5 Instance management tools #

OpenStack provides command-line, web interface, and API-based instance management tools. Third-party management tools are also available, using either the native API or the provided EC2-compatible API.

The OpenStack python-novaclient package provides a basic command-line

utility, which uses the nova command. This is available as a native

package for most Linux distributions, or you can install the latest

version using the pip python package installer:

# pip install python-novaclient

For more information about python-novaclient and other command-line tools, see the OpenStack End User Guide.

5.2.6 Control where instances run #

The Scheduling section of OpenStack Configuration Reference provides detailed information on controlling where your instances run, including ensuring a set of instances run on different compute nodes for service resiliency or on the same node for high performance inter-instance communications.

Administrative users can specify which compute node their instances

run on. To do this, specify the --availability-zone

AVAILABILITY_ZONE:COMPUTE_HOST parameter.

5.2.7 Launch instances with UEFI #

Unified Extensible Firmware Interface (UEFI) is a standard firmware designed to replace legacy BIOS. There is a slow but steady trend for operating systems to move to the UEFI format and, in some cases, make it their only format.

To configure UEFI environment

To successfully launch an instance from an UEFI image in QEMU/KVM environment, the administrator has to install the following packages on compute node:

OVMF, a port of Intel's tianocore firmware to QEMU virtual machine.

libvirt, which has been supporting UEFI boot since version 1.2.9.

Because default UEFI loader path is /usr/share/OVMF/OVMF_CODE.fd, the

administrator must create one link to this location after UEFI package

is installed.

To upload UEFI images

To launch instances from a UEFI image, the administrator first has to

upload one UEFI image. To do so, hw_firmware_type property must

be set to uefi when the image is created. For example:

$ openstack image create --container-format bare --disk-format qcow2 \ --property hw_firmware_type=uefi --file /tmp/cloud-uefi.qcow --name uefi

After that, you can launch instances from this UEFI image.

5.3 Networking with nova-network #

Understanding the networking configuration options helps you design the best configuration for your Compute instances.

You can choose to either install and configure nova-network or use the

OpenStack Networking service (neutron). This section contains a brief

overview of nova-network. For more information about OpenStack

Networking, see Chapter 9, Networking.

5.3.1 Networking concepts #

Compute assigns a private IP address to each VM instance. Compute makes a distinction between fixed IPs and floating IP. Fixed IPs are IP addresses that are assigned to an instance on creation and stay the same until the instance is explicitly terminated. Floating IPs are addresses that can be dynamically associated with an instance. A floating IP address can be disassociated and associated with another instance at any time. A user can reserve a floating IP for their project.

Currently, Compute with nova-network only supports Linux bridge

networking that allows virtual interfaces to connect to the outside

network through the physical interface.

The network controller with nova-network provides virtual networks to

enable compute servers to interact with each other and with the public

network. Compute with nova-network supports the following network modes,

which are implemented as Network Manager types:

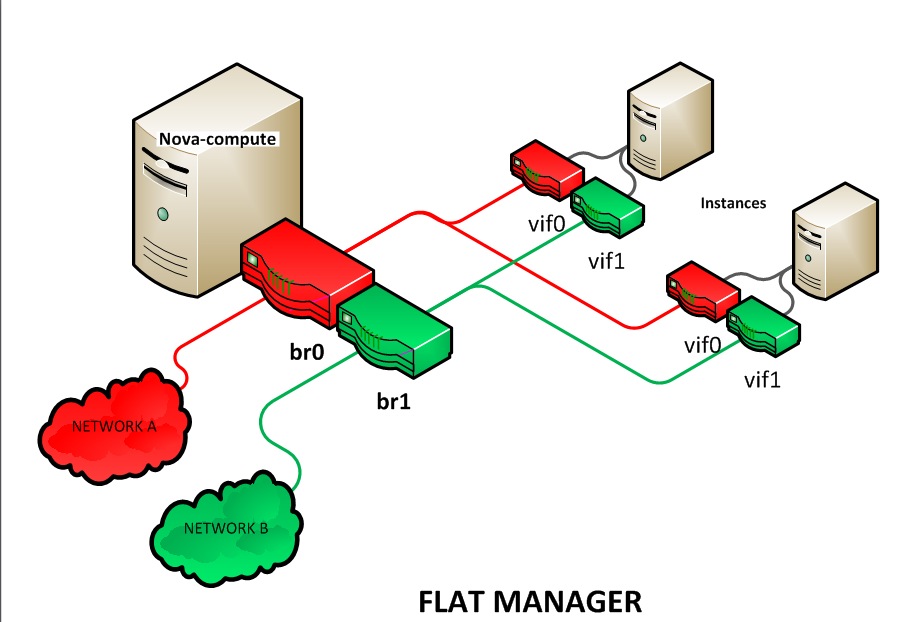

- Flat Network Manager

In this mode, a network administrator specifies a subnet. IP addresses for VM instances are assigned from the subnet, and then injected into the image on launch. Each instance receives a fixed IP address from the pool of available addresses. A system administrator must create the Linux networking bridge (typically named

br100, although this is configurable) on the systems running thenova-networkservice. All instances of the system are attached to the same bridge, which is configured manually by the network administrator.

Configuration injection currently only works on Linux-style

systems that keep networking configuration in

/etc/network/interfaces.

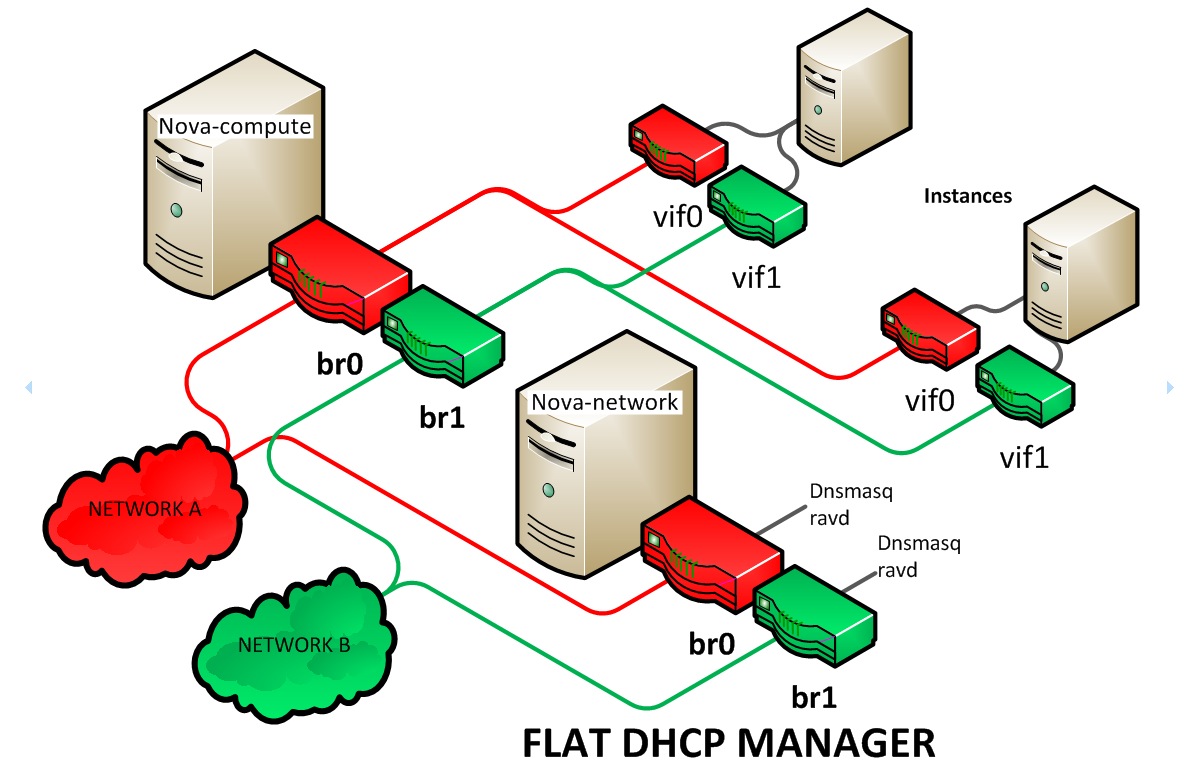

- Flat DHCP Network Manager

In this mode, OpenStack starts a DHCP server (dnsmasq) to allocate IP addresses to VM instances from the specified subnet, in addition to manually configuring the networking bridge. IP addresses for VM instances are assigned from a subnet specified by the network administrator.

Like flat mode, all instances are attached to a single bridge on the compute node. Additionally, a DHCP server configures instances depending on single-/multi-host mode, alongside each

nova-network. In this mode, Compute does a bit more configuration. It attempts to bridge into an Ethernet device (flat_interface, eth0 by default). For every instance, Compute allocates a fixed IP address and configures dnsmasq with the MAC ID and IP address for the VM. Dnsmasq does not take part in the IP address allocation process, it only hands out IPs according to the mapping done by Compute. Instances receive their fixed IPs with thedhcpdiscovercommand. These IPs are not assigned to any of the host's network interfaces, only to the guest-side interface for the VM.In any setup with flat networking, the hosts providing the

nova-networkservice are responsible for forwarding traffic from the private network. They also run and configure dnsmasq as a DHCP server listening on this bridge, usually on IP address 10.0.0.1 (see Section 5.3.2, “DHCP server: dnsmasq”). Compute can determine the NAT entries for each network, although sometimes NAT is not used, such as when the network has been configured with all public IPs, or if a hardware router is used (which is a high availability option). In this case, hosts need to havebr100configured and physically connected to any other nodes that are hosting VMs. You must set theflat_network_bridgeoption or create networks with the bridge parameter in order to avoid raising an error. Compute nodes have iptables or ebtables entries created for each project and instance to protect against MAC ID or IP address spoofing and ARP poisoning.

In single-host Flat DHCP mode you will be able to ping VMs

through their fixed IP from the nova-network node, but you

cannot ping them from the compute nodes. This is expected

behavior.

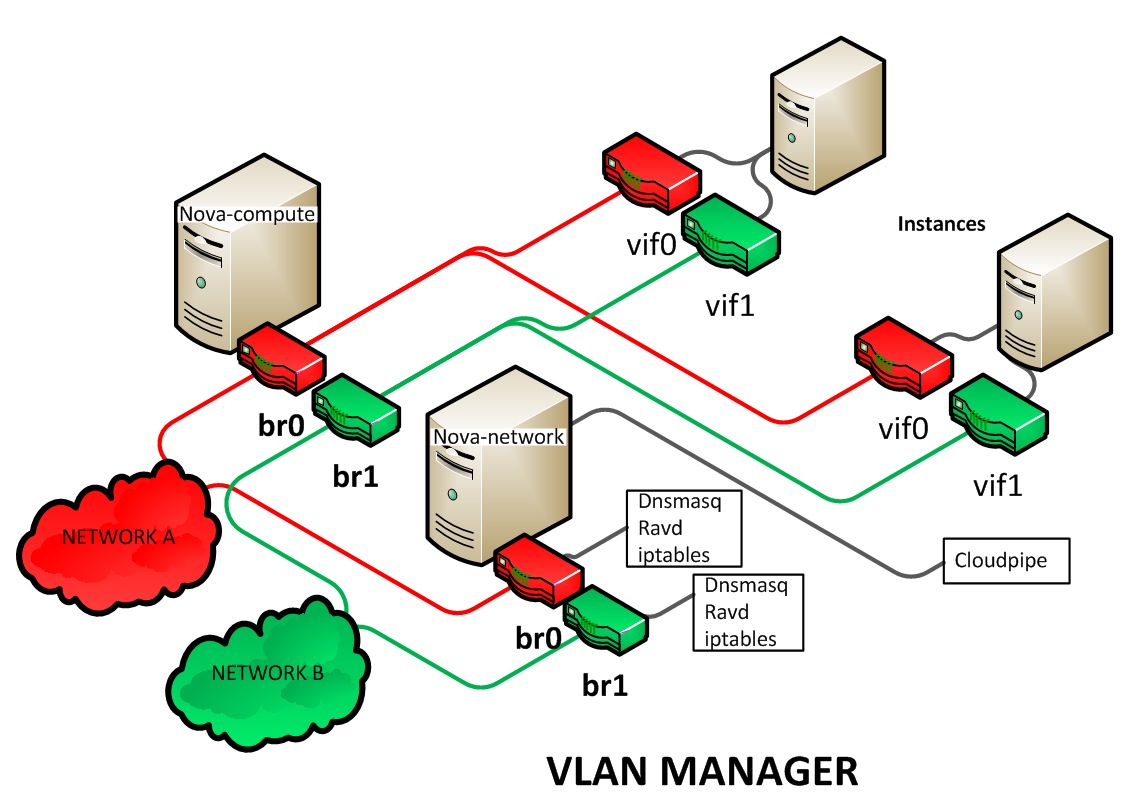

- VLAN Network Manager

This is the default mode for OpenStack Compute. In this mode, Compute creates a VLAN and bridge for each project. For multiple-machine installations, the VLAN Network Mode requires a switch that supports VLAN tagging (IEEE 802.1Q). The project gets a range of private IPs that are only accessible from inside the VLAN. In order for a user to access the instances in their project, a special VPN instance (code named

cloudpipe) needs to be created. Compute generates a certificate and key for the user to access the VPN and starts the VPN automatically. It provides a private network segment for each project's instances that can be accessed through a dedicated VPN connection from the internet. In this mode, each project gets its own VLAN, Linux networking bridge, and subnet.The subnets are specified by the network administrator, and are assigned dynamically to a project when required. A DHCP server is started for each VLAN to pass out IP addresses to VM instances from the subnet assigned to the project. All instances belonging to one project are bridged into the same VLAN for that project. OpenStack Compute creates the Linux networking bridges and VLANs when required.

These network managers can co-exist in a cloud system. However, because you cannot select the type of network for a given project, you cannot configure multiple network types in a single Compute installation.

All network managers configure the network using network drivers. For

example, the Linux L3 driver (l3.py and linux_net.py), which

makes use of iptables, route and other network management

facilities, and the libvirt network filtering

facilities. The driver is

not tied to any particular network manager; all network managers use the

same driver. The driver usually initializes only when the first VM lands

on this host node.

All network managers operate in either single-host or multi-host mode.

This choice greatly influences the network configuration. In single-host

mode, a single nova-network service provides a default gateway for VMs

and hosts a single DHCP server (dnsmasq). In multi-host mode, each

compute node runs its own nova-network service. In both cases, all

traffic between VMs and the internet flows through nova-network. Each

mode has benefits and drawbacks. For more on this, see the Network

Topology section in the OpenStack Operations Guide.

All networking options require network connectivity to be already set up

between OpenStack physical nodes. OpenStack does not configure any

physical network interfaces. All network managers automatically create

VM virtual interfaces. Some network managers can also create network

bridges such as br100.

The internal network interface is used for communication with VMs. The

interface should not have an IP address attached to it before OpenStack

installation, it serves only as a fabric where the actual endpoints are

VMs and dnsmasq. Additionally, the internal network interface must be in

promiscuous mode, so that it can receive packets whose target MAC

address is the guest VM, not the host.

All machines must have a public and internal network interface

(controlled by these options: public_interface for the public

interface, and flat_interface and vlan_interface for the

internal interface with flat or VLAN managers). This guide refers to the

public network as the external network and the private network as the

internal or project network.

For flat and flat DHCP modes, use the nova network-create command

to create a network:

$ nova network-create vmnet \ --fixed-range-v4 10.0.0.0/16 --fixed-cidr 10.0.20.0/24 --bridge br100

- This example uses the following parameters:

-

--fixed-range-v4 specifies the network subnet.

-

--fixed-cidr specifies a range of fixed IP addresses to allocate, and can be a subset of the

--fixed-range-v4argument.-

--bridge specifies the bridge device to which this network is connected on every compute node.

-

5.3.2 DHCP server: dnsmasq #

The Compute service uses

dnsmasq as the DHCP

server when using either Flat DHCP Network Manager or VLAN Network

Manager. For Compute to operate in IPv4/IPv6 dual-stack mode, use at

least dnsmasq v2.63. The nova-network service is responsible for

starting dnsmasq processes.

The behavior of dnsmasq can be customized by creating a dnsmasq

configuration file. Specify the configuration file using the

dnsmasq_config_file configuration option:

dnsmasq_config_file=/etc/dnsmasq-nova.confFor more information about creating a dnsmasq configuration file, see the OpenStack Configuration Reference, and the dnsmasq documentation.

Dnsmasq also acts as a caching DNS server for instances. You can specify

the DNS server that dnsmasq uses by setting the dns_server

configuration option in /etc/nova/nova.conf. This example configures

dnsmasq to use Google's public DNS server:

dns_server=8.8.8.8Dnsmasq logs to syslog (typically /var/log/syslog or

/var/log/messages, depending on Linux distribution). Logs can be

useful for troubleshooting, especially in a situation where VM instances

boot successfully but are not reachable over the network.

Administrators can specify the starting point IP address to reserve with the DHCP server (in the format n.n.n.n) with this command:

$ nova-manage fixed reserve --address IP_ADDRESS

This reservation only affects which IP address the VMs start at, not the

fixed IP addresses that nova-network places on the bridges.

5.3.3 Configure Compute to use IPv6 addresses #

If you are using OpenStack Compute with nova-network, you can put

Compute into dual-stack mode, so that it uses both IPv4 and IPv6

addresses for communication. In dual-stack mode, instances can acquire

their IPv6 global unicast addresses by using a stateless address

auto-configuration mechanism [RFC 4862/2462]. IPv4/IPv6 dual-stack mode

works with both VlanManager and FlatDHCPManager networking

modes.

In VlanManager networking mode, each project uses a different 64-bit

global routing prefix. In FlatDHCPManager mode, all instances use

one 64-bit global routing prefix.

This configuration was tested with virtual machine images that have an

IPv6 stateless address auto-configuration capability. This capability is

required for any VM to run with an IPv6 address. You must use an EUI-64

address for stateless address auto-configuration. Each node that

executes a nova-* service must have python-netaddr and radvd

installed.

Switch into IPv4/IPv6 dual-stack mode

For every node running a

nova-*service, install python-netaddr:# apt-get install python-netaddr

For every node running

nova-network, installradvdand configure IPv6 networking:# apt-get install radvd # echo 1 > /proc/sys/net/ipv6/conf/all/forwarding # echo 0 > /proc/sys/net/ipv6/conf/all/accept_ra

On all nodes, edit the

nova.conffile and specifyuse_ipv6 = True.Restart all

nova-*services.

IPv6 configuration options

You can use the following options with the nova network-create

command:

Add a fixed range for IPv6 addresses to the

nova network-createcommand. Specifypublicorprivateafter thenetwork-createparameter.$ nova network-create public --fixed-range-v4 FIXED_RANGE_V4 \ --vlan VLAN_ID --vpn VPN_START --fixed-range-v6 FIXED_RANGE_V6

Set the IPv6 global routing prefix by using the

--fixed_range_v6parameter. The default value for the parameter isfd00::/48.When you use

FlatDHCPManager, the command uses the original--fixed_range_v6value. For example:$ nova network-create public --fixed-range-v4 10.0.2.0/24 \ --fixed-range-v6 fd00:1::/48

When you use

VlanManager, the command increments the subnet ID to create subnet prefixes. Guest VMs use this prefix to generate their IPv6 global unicast addresses. For example:$ nova network-create public --fixed-range-v4 10.0.1.0/24 --vlan 100 \ --vpn 1000 --fixed-range-v6 fd00:1::/48

|

Configuration option = Default value |

Description |

|---|---|

|

[DEFAULT] | |

|

fixed_range_v6 = fd00::/48 |

(StrOpt) Fixed IPv6 address block |

|

gateway_v6 = None |

(StrOpt) Default IPv6 gateway |

|

ipv6_backend = rfc2462 |

(StrOpt) Backend to use for IPv6 generation |

|

use_ipv6 = False |

(BoolOpt) Use IPv6 |

5.3.4 Metadata service #

Compute uses a metadata service for virtual machine instances to

retrieve instance-specific data. Instances access the metadata service

at http://169.254.169.254. The metadata service supports two sets of

APIs: an OpenStack metadata API and an EC2-compatible API. Both APIs are

versioned by date.

To retrieve a list of supported versions for the OpenStack metadata API,

make a GET request to http://169.254.169.254/openstack:

$ curl http://169.254.169.254/openstack 2012-08-10 2013-04-04 2013-10-17 latest

To list supported versions for the EC2-compatible metadata API, make a

GET request to http://169.254.169.254:

$ curl http://169.254.169.254 1.0 2007-01-19 2007-03-01 2007-08-29 2007-10-10 2007-12-15 2008-02-01 2008-09-01 2009-04-04 latest

If you write a consumer for one of these APIs, always attempt to access the most recent API version supported by your consumer first, then fall back to an earlier version if the most recent one is not available.

Metadata from the OpenStack API is distributed in JSON format. To

retrieve the metadata, make a GET request to

http://169.254.169.254/openstack/2012-08-10/meta_data.json:

$ curl http://169.254.169.254/openstack/2012-08-10/meta_data.json

{

"uuid": "d8e02d56-2648-49a3-bf97-6be8f1204f38",

"availability_zone": "nova",

"hostname": "test.novalocal",

"launch_index": 0,

"meta": {

"priority": "low",

"role": "webserver"

},

"project_id": "f7ac731cc11f40efbc03a9f9e1d1d21f",

"public_keys": {

"mykey": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDYVEprvtYJXVOBN0XNKV\

VRNCRX6BlnNbI+USLGais1sUWPwtSg7z9K9vhbYAPUZcq8c/s5S9dg5vTH\

bsiyPCIDOKyeHba4MUJq8Oh5b2i71/3BISpyxTBH/uZDHdslW2a+SrPDCe\

uMMoss9NFhBdKtDkdG9zyi0ibmCP6yMdEX8Q== Generated by Nova\n"

},

"name": "test"

}Instances also retrieve user data (passed as the user_data parameter

in the API call or by the --user_data flag in the

openstack server create command) through the metadata service, by making a

GET request to http://169.254.169.254/openstack/2012-08-10/user_data:

$ curl http://169.254.169.254/openstack/2012-08-10/user_data #!/bin/bash echo 'Extra user data here'

The metadata service has an API that is compatible with version 2009-04-04 of the Amazon EC2 metadata service. This means that virtual machine images designed for EC2 will work properly with OpenStack.

The EC2 API exposes a separate URL for each metadata element. Retrieve a

listing of these elements by making a GET query to

http://169.254.169.254/2009-04-04/meta-data/:

$ curl http://169.254.169.254/2009-04-04/meta-data/ ami-id ami-launch-index ami-manifest-path block-device-mapping/ hostname instance-action instance-id instance-type kernel-id local-hostname local-ipv4 placement/ public-hostname public-ipv4 public-keys/ ramdisk-id reservation-id security-groups

$ curl http://169.254.169.254/2009-04-04/meta-data/block-device-mapping/ ami

$ curl http://169.254.169.254/2009-04-04/meta-data/placement/ availability-zone

$ curl http://169.254.169.254/2009-04-04/meta-data/public-keys/ 0=mykey

Instances can retrieve the public SSH key (identified by keypair name

when a user requests a new instance) by making a GET request to

http://169.254.169.254/2009-04-04/meta-data/public-keys/0/openssh-key:

$ curl http://169.254.169.254/2009-04-04/meta-data/public-keys/0/openssh-key ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDYVEprvtYJXVOBN0XNKVVRNCRX6BlnNbI+US\ LGais1sUWPwtSg7z9K9vhbYAPUZcq8c/s5S9dg5vTHbsiyPCIDOKyeHba4MUJq8Oh5b2i71/3B\ ISpyxTBH/uZDHdslW2a+SrPDCeuMMoss9NFhBdKtDkdG9zyi0ibmCP6yMdEX8Q== Generated\ by Nova

Instances can retrieve user data by making a GET request to

http://169.254.169.254/2009-04-04/user-data:

$ curl http://169.254.169.254/2009-04-04/user-data #!/bin/bash echo 'Extra user data here'

The metadata service is implemented by either the nova-api service or

the nova-api-metadata service. Note that the nova-api-metadata service

is generally only used when running in multi-host mode, as it retrieves

instance-specific metadata. If you are running the nova-api service, you

must have metadata as one of the elements listed in the

enabled_apis configuration option in /etc/nova/nova.conf. The

default enabled_apis configuration setting includes the metadata

service, so you do not need to modify it.

Hosts access the service at 169.254.169.254:80, and this is

translated to metadata_host:metadata_port by an iptables rule

established by the nova-network service. In multi-host mode, you can set

metadata_host to 127.0.0.1.

For instances to reach the metadata service, the nova-network service

must configure iptables to NAT port 80 of the 169.254.169.254

address to the IP address specified in metadata_host (this defaults

to $my_ip, which is the IP address of the nova-network service) and

port specified in metadata_port (which defaults to 8775) in

/etc/nova/nova.conf.

The metadata_host configuration option must be an IP address,

not a host name.

The default Compute service settings assume that nova-network and

nova-api are running on the same host. If this is not the case, in the

/etc/nova/nova.conf file on the host running nova-network, set the

metadata_host configuration option to the IP address of the host

where nova-api is running.

|

Configuration option = Default value |

Description |

|---|---|

|

[DEFAULT] | |

|

metadata_cache_expiration = 15 |

(IntOpt) Time in seconds to cache metadata; 0 to disable metadata caching entirely (not recommended). Increasing this should improve response times of the metadata API when under heavy load. Higher values may increase memory usage and result in longer times for host metadata changes to take effect. |

|

metadata_host = $my_ip |

(StrOpt) The IP address for the metadata API server |

|

metadata_listen = 0.0.0.0 |

(StrOpt) The IP address on which the metadata API will listen. |

|

metadata_listen_port = 8775 |

(IntOpt) The port on which the metadata API will listen. |

|

metadata_manager = nova.api.manager.MetadataManager |

(StrOpt) OpenStack metadata service manager |

|

metadata_port = 8775 |

(IntOpt) The port for the metadata API port |

|

metadata_workers = None |

(IntOpt) Number of workers for metadata service. The default will be the number of CPUs available. |

|

vendordata_driver = nova.api.metadata.vendordata_json.JsonFileVendorData |

(StrOpt) Driver to use for vendor data |

|

vendordata_jsonfile_path = None |

(StrOpt) File to load JSON formatted vendor data from |

5.3.5 Enable ping and SSH on VMs #

You need to enable ping and ssh on your VMs for network access.

This can be done with either the nova or euca2ools

commands.

Run these commands as root only if the credentials used to interact

with nova-api are in /root/.bashrc. If the EC2 credentials in

the .bashrc file are for an unprivileged user, you must run

these commands as that user instead.

Enable ping and SSH with openstack security group rule create

commands:

$ openstack security group rule create default --protocol icmp --dst-port -1:-1 --remote-ip 0.0.0.0/0 $ openstack security group rule create default --protocol tcp --dst-port 22:22 --remote-ip 0.0.0.0/0

Enable ping and SSH with euca2ools:

$ euca-authorize -P icmp -t -1:-1 -s 0.0.0.0/0 default $ euca-authorize -P tcp -p 22 -s 0.0.0.0/0 default

If you have run these commands and still cannot ping or SSH your

instances, check the number of running dnsmasq processes, there

should be two. If not, kill the processes and restart the service with

these commands:

# killall dnsmasq # service nova-network restart

5.3.6 Configure public (floating) IP addresses #

This section describes how to configure floating IP addresses with

nova-network. For information about doing this with OpenStack

Networking, see Section 9.9.2, “L3 routing and NAT”.

5.3.6.1 Private and public IP addresses #

In this section, the term floating IP address is used to refer to an IP address, usually public, that you can dynamically add to a running virtual instance.

Every virtual instance is automatically assigned a private IP address. You can choose to assign a public (or floating) IP address instead. OpenStack Compute uses network address translation (NAT) to assign floating IPs to virtual instances.

To be able to assign a floating IP address, edit the

/etc/nova/nova.conf file to specify which interface the

nova-network service should bind public IP addresses to:

public_interface=VLAN100If you make changes to the /etc/nova/nova.conf file while the

nova-network service is running, you will need to restart the service to

pick up the changes.

Floating IPs are implemented by using a source NAT (SNAT rule in iptables), so security groups can sometimes display inconsistent behavior if VMs use their floating IP to communicate with other VMs, particularly on the same physical host. Traffic from VM to VM across the fixed network does not have this issue, and so this is the recommended setup. To ensure that traffic does not get SNATed to the floating range, explicitly set:

dmz_cidr=x.x.x.x/yThe x.x.x.x/y value specifies the range of floating IPs for each

pool of floating IPs that you define. This configuration is also

required if the VMs in the source group have floating IPs.

5.3.6.2 Enable IP forwarding #

IP forwarding is disabled by default on most Linux distributions. You will need to enable it in order to use floating IPs.

IP forwarding only needs to be enabled on the nodes that run

nova-network. However, you will need to enable it on all compute

nodes if you use multi_host mode.

To check if IP forwarding is enabled, run:

$ cat /proc/sys/net/ipv4/ip_forward 0

Alternatively, run:

$ sysctl net.ipv4.ip_forward net.ipv4.ip_forward = 0

In these examples, IP forwarding is disabled.

To enable IP forwarding dynamically, run:

# sysctl -w net.ipv4.ip_forward=1

Alternatively, run:

# echo 1 > /proc/sys/net/ipv4/ip_forward

To make the changes permanent, edit the /etc/sysctl.conf file and

update the IP forwarding setting:

net.ipv4.ip_forward = 1Save the file and run this command to apply the changes:

# sysctl -p

You can also apply the changes by restarting the network service:

on Ubuntu, Debian:

# /etc/init.d/networking restart

on RHEL, Fedora, CentOS, openSUSE and SLES:

# service network restart

5.3.6.3 Create a list of available floating IP addresses #

Compute maintains a list of floating IP addresses that are available for

assigning to instances. Use the nova-manage floating commands

to perform floating IP operations:

Add entries to the list:

# nova-manage floating create --pool nova --ip_range 68.99.26.170/31

List the floating IP addresses in the pool:

# openstack floating ip list

Create specific floating IPs for either a single address or a subnet:

# nova-manage floating create --pool POOL_NAME --ip_range CIDR

Remove floating IP addresses using the same parameters as the create command:

# openstack floating ip delete CIDR

For more information about how administrators can associate floating IPs with instances, see Manage IP addresses in the OpenStack Administrator Guide.

5.3.6.4 Automatically add floating IPs #

You can configure nova-network to automatically allocate and assign a

floating IP address to virtual instances when they are launched. Add

this line to the /etc/nova/nova.conf file:

auto_assign_floating_ip=TrueSave the file, and restart nova-network

If this option is enabled, but all floating IP addresses have

already been allocated, the openstack server create

command will fail.

5.3.7 Remove a network from a project #

You cannot delete a network that has been associated to a project. This section describes the procedure for dissociating it so that it can be deleted.

In order to disassociate the network, you will need the ID of the project it has been associated to. To get the project ID, you will need to be an administrator.

Disassociate the network from the project using the

nova-manage project scrub command,

with the project ID as the final parameter:

# nova-manage project scrub --project ID

5.3.8 Multiple interfaces for instances (multinic) #

The multinic feature allows you to use more than one interface with your instances. This is useful in several scenarios:

SSL Configurations (VIPs)

Services failover/HA

Bandwidth Allocation

Administrative/Public access to your instances

Each VIP represents a separate network with its own IP block. Every network mode has its own set of changes regarding multinic usage:

5.3.8.1 Using multinic #

In order to use multinic, create two networks, and attach them to the

project (named project on the command line):

$ nova network-create first-net --fixed-range-v4 20.20.0.0/24 --project-id $your-project $ nova network-create second-net --fixed-range-v4 20.20.10.0/24 --project-id $your-project

Each new instance will now receive two IP addresses from their respective DHCP servers:

$ openstack server list +---------+----------+--------+-----------------------------------------+------------+ |ID | Name | Status | Networks | Image Name | +---------+----------+--------+-----------------------------------------+------------+ | 1234... | MyServer | ACTIVE | network2=20.20.0.3; private=20.20.10.14 | cirros | +---------+----------+--------+-----------------------------------------+------------+

Make sure you start the second interface on the instance, or it won't be reachable through the second IP.

This example demonstrates how to set up the interfaces within the instance. This is the configuration that needs to be applied inside the image.

Edit the /etc/network/interfaces file:

# The loopback network interface

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet dhcp

auto eth1

iface eth1 inet dhcpIf the Virtual Network Service Neutron is installed, you can specify the

networks to attach to the interfaces by using the --nic flag with

the openstack server create command:

$ openstack server create --image ed8b2a37-5535-4a5f-a615-443513036d71 \ --flavor 1 --nic net-id=NETWORK1_ID --nic net-id=NETWORK2_ID test-vm1

5.3.9 Troubleshooting Networking #

5.3.9.1 Cannot reach floating IPs #

Problem#

You cannot reach your instances through the floating IP address.

Solution#

Check that the default security group allows ICMP (ping) and SSH (port 22), so that you can reach the instances:

$ openstack security group rule list default +--------------------------------------+-------------+-----------+-----------------+-----------------------+ | ID | IP Protocol | IP Range | Port Range | Remote Security Group | +--------------------------------------+-------------+-----------+-----------------+-----------------------+ | 63536865-e5b6-4df1-bac5-ca6d97d8f54d | tcp | 0.0.0.0/0 | 22:22 | None | | e9d3200f-647a-4293-a9fc-e65ceee189ae | icmp | 0.0.0.0/0 | type=1:code=-1 | None | +--------------------------------------+-------------+-----------+-----------------+-----------------------+

Check the NAT rules have been added to iptables on the node that is running

nova-network:# iptables -L -nv -t nat -A nova-network-PREROUTING -d 68.99.26.170/32 -j DNAT --to-destination 10.0.0.3 -A nova-network-floating-snat -s 10.0.0.3/32 -j SNAT --to-source 68.99.26.170

Check that the public address (

68.99.26.170in this example), has been added to your public interface. You should see the address in the listing when you use theip addrcommand:$ ip addr 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000 link/ether xx:xx:xx:17:4b:c2 brd ff:ff:ff:ff:ff:ff inet 13.22.194.80/24 brd 13.22.194.255 scope global eth0 inet 68.99.26.170/32 scope global eth0 inet6 fe80::82b:2bf:fe1:4b2/64 scope link valid_lft forever preferred_lft forever

NoteYou cannot use

SSHto access an instance with a public IP from within the same server because the routing configuration does not allow it.Use

tcpdumpto identify if packets are being routed to the inbound interface on the compute host. If the packets are reaching the compute hosts but the connection is failing, the issue may be that the packet is being dropped by reverse path filtering. Try disabling reverse-path filtering on the inbound interface. For example, if the inbound interface iseth2, run:# sysctl -w net.ipv4.conf.ETH2.rp_filter=0

If this solves the problem, add the following line to

/etc/sysctl.confso that the reverse-path filter is persistent:net.ipv4.conf.rp_filter=0

5.3.9.2 Temporarily disable firewall #

Problem#

Networking issues prevent administrators accessing or reaching VM's through various pathways.

Solution#

You can disable the firewall by setting this option

in /etc/nova/nova.conf:

firewall_driver=nova.virt.firewall.NoopFirewallDriver5.3.9.3 Packet loss from instances to nova-network server (VLANManager mode) #

Problem#

If you can access your instances with SSH but the network to your instance

is slow, or if you find that running certain operations are slower than

they should be (for example, sudo), packet loss could be occurring

on the connection to the instance.

Packet loss can be caused by Linux networking configuration settings

related to bridges. Certain settings can cause packets to be dropped

between the VLAN interface (for example, vlan100) and the associated

bridge interface (for example, br100) on the host running

nova-network.

Solution#

One way to check whether this is the problem is to open three terminals and run the following commands:

In the first terminal, on the host running

nova-network, usetcpdumpon the VLAN interface to monitor DNS-related traffic (UDP, port 53). As root, run:# tcpdump -K -p -i vlan100 -v -vv udp port 53

In the second terminal, also on the host running

nova-network, usetcpdumpto monitor DNS-related traffic on the bridge interface. As root, run:# tcpdump -K -p -i br100 -v -vv udp port 53

In the third terminal, use

SSHto access the instance and generate DNS requests by using thenslookupcommand:$ nslookup www.google.com

The symptoms may be intermittent, so try running

nslookupmultiple times. If the network configuration is correct, the command should return immediately each time. If it is not correct, the command hangs for several seconds before returning.If the

nslookupcommand sometimes hangs, and there are packets that appear in the first terminal but not the second, then the problem may be due to filtering done on the bridges. Try disabling filtering, and running these commands as root:# sysctl -w net.bridge.bridge-nf-call-arptables=0 # sysctl -w net.bridge.bridge-nf-call-iptables=0 # sysctl -w net.bridge.bridge-nf-call-ip6tables=0

If this solves your issue, add the following line to

/etc/sysctl.confso that the changes are persistent:net.bridge.bridge-nf-call-arptables=0 net.bridge.bridge-nf-call-iptables=0 net.bridge.bridge-nf-call-ip6tables=0

5.3.9.4 KVM: Network connectivity works initially, then fails #

Problem#

With KVM hypervisors, instances running Ubuntu 12.04 sometimes lose network connectivity after functioning properly for a period of time.

Solution#

Try loading the vhost_net kernel module as a workaround for this

issue (see bug

#997978)

. This kernel module may also improve network

performance on KVM. To load

the kernel module:

# modprobe vhost_net

Loading the module has no effect on running instances.

5.4 System administration #

To effectively administer compute, you must understand how the different installed nodes interact with each other. Compute can be installed in many different ways using multiple servers, but generally multiple compute nodes control the virtual servers and a cloud controller node contains the remaining Compute services.

The Compute cloud works using a series of daemon processes named nova-*

that exist persistently on the host machine. These binaries can all run

on the same machine or be spread out on multiple boxes in a large

deployment. The responsibilities of services and drivers are:

Services

-

nova-api receives XML requests and sends them to the rest of the system. A WSGI app routes and authenticates requests. Supports the EC2 and OpenStack APIs. A

nova.confconfiguration file is created when Compute is installed.-

nova-cert manages certificates.

-

nova-compute manages virtual machines. Loads a Service object, and exposes the public methods on ComputeManager through a Remote Procedure Call (RPC).

-

nova-conductor provides database-access support for compute nodes (thereby reducing security risks).

-

nova-consoleauth manages console authentication.

-

nova-objectstore a simple file-based storage system for images that replicates most of the S3 API. It can be replaced with OpenStack Image service and either a simple image manager or OpenStack Object Storage as the virtual machine image storage facility. It must exist on the same node as

nova-compute.-

nova-network manages floating and fixed IPs, DHCP, bridging and VLANs. Loads a Service object which exposes the public methods on one of the subclasses of NetworkManager. Different networking strategies are available by changing the

network_managerconfiguration option toFlatManager,FlatDHCPManager, orVLANManager(defaults toVLANManagerif nothing is specified).-

nova-scheduler dispatches requests for new virtual machines to the correct node.

-

nova-novncproxy provides a VNC proxy for browsers, allowing VNC consoles to access virtual machines.

Some services have drivers that change how the service implements

its core functionality. For example, the nova-compute service

supports drivers that let you choose which hypervisor type it can

use. nova-network and nova-scheduler also have drivers.

5.4.1 Manage Compute users #

Access to the Euca2ools (ec2) API is controlled by an access key and a secret key. The user's access key needs to be included in the request, and the request must be signed with the secret key. Upon receipt of API requests, Compute verifies the signature and runs commands on behalf of the user.

To begin using Compute, you must create a user with the Identity service.

5.4.2 Manage volumes #

Depending on the setup of your cloud provider, they may give you an

endpoint to use to manage volumes, or there may be an extension under

the covers. In either case, you can use the openstack CLI to manage

volumes.

|

Command |

Description |

|---|---|

|

server add volume |

Attach a volume to a server. |

|

volume create |

Add a new volume. |

|

volume delete |

Remove or delete a volume. |

|

server remove volume |

Detach or remove a volume from a server. |

|

volume list |

List all the volumes. |

|

volume show |

Show details about a volume. |

|

snapshot create |

Add a new snapshot. |

|

snapshot delete |

Remove a snapshot. |

|

snapshot list |

List all the snapshots. |

|

snapshot show |

Show details about a snapshot. |

|

volume type create |

Create a new volume type. |

|

volume type delete |

Delete a specific flavor |

|

volume type list |

Print a list of available 'volume types'. |

For example, to list IDs and names of volumes, run:

$ openstack volume list +--------+--------------+-----------+------+-------------+ | ID | Display Name | Status | Size | Attached to | +--------+--------------+-----------+------+-------------+ | 86e6cb | testnfs | available | 1 | | | e389f7 | demo | available | 1 | | +--------+--------------+-----------+------+-------------+

5.4.3 Flavors #

Admin users can use the openstack flavor command to customize and

manage flavors. To see information for this command, run:

$ openstack flavor --help Command "flavor" matches: flavor create flavor delete flavor list flavor set flavor show flavor unset

Configuration rights can be delegated to additional users by redefining the access controls for

compute_extension:flavormanagein/etc/nova/policy.jsonon thenova-apiserver.The Dashboard simulates the ability to modify a flavor by deleting an existing flavor and creating a new one with the same name.

Flavors define these elements:

|

Element |

Description |

|---|---|

|

Name |

A descriptive name. XX.SIZE_NAME is typically not required, though some third party tools may rely on it. |

|

Memory MB |

Instance memory in megabytes. |

|

Disk |

Virtual root disk size in gigabytes. This is an ephemeral disk that the base image is copied into. When booting from a persistent volume it is not used. The "0" size is a special case which uses the native base image size as the size of the ephemeral root volume. |

|

Ephemeral |

Specifies the size of a secondary ephemeral data disk. This

is an empty, unformatted disk and exists only for the life of the instance. Default value is |

|

Swap |

Optional swap space allocation for the instance. Default

value is |

|

VCPUs |

Number of virtual CPUs presented to the instance. |

|

RXTX Factor |

Optional property allows created servers to have a different

bandwidth cap than that defined in the network they are attached to. This factor is multiplied by the rxtx_base property of the network. Default value is |

|

Is Public |

Boolean value, whether flavor is available to all users or private to the project it was created in. Defaults to |

|

Extra Specs |

Key and value pairs that define on which compute nodes a flavor can run. These pairs must match corresponding pairs on the compute nodes. Use to implement special resources, such as flavors that run on only compute nodes with GPU hardware. |

Flavor customization can be limited by the hypervisor in use. For example the libvirt driver enables quotas on CPUs available to a VM, disk tuning, bandwidth I/O, watchdog behavior, random number generator device control, and instance VIF traffic control.

5.4.3.1 Is Public #

Flavors can be assigned to particular projects. By default, a flavor is public and available to all projects. Private flavors are only accessible to those on the access list and are invisible to other projects. To create and assign a private flavor to a project, run this command:

$ openstack flavor create --private p1.medium --id auto --ram 512 --disk 40 --vcpus 4

5.4.3.2 Extra Specs #

- CPU limits

You can configure the CPU limits with control parameters with the

novaclient. For example, to configure the I/O limit, use:$ openstack flavor set FLAVOR-NAME \ --property quota:read_bytes_sec=10240000 \ --property quota:write_bytes_sec=10240000Use these optional parameters to control weight shares, enforcement intervals for runtime quotas, and a quota for maximum allowed bandwidth:

cpu_shares: Specifies the proportional weighted share for the domain. If this element is omitted, the service defaults to the OS provided defaults. There is no unit for the value; it is a relative measure based on the setting of other VMs. For example, a VM configured with value 2048 gets twice as much CPU time as a VM configured with value 1024.cpu_shares_level: On VMware, specifies the allocation level. Can becustom,high,normal, orlow. If you choosecustom, set the number of shares usingcpu_shares_share.cpu_period: Specifies the enforcement interval (unit: microseconds) for QEMU and LXC hypervisors. Within a period, each VCPU of the domain is not allowed to consume more than the quota worth of runtime. The value should be in range[1000, 1000000]. A period with value 0 means no value.cpu_limit: Specifies the upper limit for VMware machine CPU allocation in MHz. This parameter ensures that a machine never uses more than the defined amount of CPU time. It can be used to enforce a limit on the machine's CPU performance.cpu_reservation: Specifies the guaranteed minimum CPU reservation in MHz for VMware. This means that if needed, the machine will definitely get allocated the reserved amount of CPU cycles.cpu_quota: Specifies the maximum allowed bandwidth (unit: microseconds). A domain with a negative-value quota indicates that the domain has infinite bandwidth, which means that it is not bandwidth controlled. The value should be in range[1000, 18446744073709551]or less than 0. A quota with value 0 means no value. You can use this feature to ensure that all vCPUs run at the same speed. For example:$ openstack flavor set FLAVOR-NAME \ --property quota:cpu_quota=10000 \ --property quota:cpu_period=20000In this example, an instance of

FLAVOR-NAMEcan only consume a maximum of 50% CPU of a physical CPU computing capability.

- Memory limits

For VMware, you can configure the memory limits with control parameters.

Use these optional parameters to limit the memory allocation, guarantee minimum memory reservation, and to specify shares used in case of resource contention:

memory_limit: Specifies the upper limit for VMware machine memory allocation in MB. The utilization of a virtual machine will not exceed this limit, even if there are available resources. This is typically used to ensure a consistent performance of virtual machines independent of available resources.memory_reservation: Specifies the guaranteed minimum memory reservation in MB for VMware. This means the specified amount of memory will definitely be allocated to the machine.memory_shares_level: On VMware, specifies the allocation level. This can becustom,high,normalorlow. If you choosecustom, set the number of shares usingmemory_shares_share.memory_shares_share: Specifies the number of shares allocated in the event thatcustomis used. There is no unit for this value. It is a relative measure based on the settings for other VMs. For example:$ openstack flavor set FLAVOR-NAME \ --property quota:memory_shares_level=custom \ --property quota:memory_shares_share=15

- Disk I/O limits

For VMware, you can configure the resource limits for disk with control parameters.

Use these optional parameters to limit the disk utilization, guarantee disk allocation, and to specify shares used in case of resource contention. This allows the VMware driver to enable disk allocations for the running instance.

disk_io_limit: Specifies the upper limit for disk utilization in I/O per second. The utilization of a virtual machine will not exceed this limit, even if there are available resources. The default value is -1 which indicates unlimited usage.disk_io_reservation: Specifies the guaranteed minimum disk allocation in terms of Input/Output Operations Per Second (IOPS).disk_io_shares_level: Specifies the allocation level. This can becustom,high,normalorlow. If you choose custom, set the number of shares usingdisk_io_shares_share.disk_io_shares_share: Specifies the number of shares allocated in the event thatcustomis used. When there is resource contention, this value is used to determine the resource allocation.The example below sets the

disk_io_reservationto 2000 IOPS.$ openstack flavor set FLAVOR-NAME \ --property quota:disk_io_reservation=2000

- Disk tuning

Using disk I/O quotas, you can set maximum disk write to 10 MB per second for a VM user. For example:

$ openstack flavor set FLAVOR-NAME \ --property quota:disk_write_bytes_sec=10485760The disk I/O options are:

disk_read_bytes_secdisk_read_iops_secdisk_write_bytes_secdisk_write_iops_secdisk_total_bytes_secdisk_total_iops_sec

- Bandwidth I/O

The vif I/O options are:

vif_inbound_averagevif_inbound_burstvif_inbound_peakvif_outbound_averagevif_outbound_burstvif_outbound_peak

Incoming and outgoing traffic can be shaped independently. The bandwidth element can have at most, one inbound and at most, one outbound child element. If you leave any of these child elements out, no Quality of Service (QoS) is applied on that traffic direction. So, if you want to shape only the network's incoming traffic, use inbound only (and vice versa). Each element has one mandatory attribute average, which specifies the average bit rate on the interface being shaped.

There are also two optional attributes (integer):

peak, which specifies the maximum rate at which a bridge can send data (kilobytes/second), andburst, the amount of bytes that can be burst at peak speed (kilobytes). The rate is shared equally within domains connected to the network.The example below sets network traffic bandwidth limits for existing flavor as follows:

Outbound traffic:

average: 262 Mbps (32768 kilobytes/second)

peak: 524 Mbps (65536 kilobytes/second)

burst: 65536 kilobytes

Inbound traffic:

average: 262 Mbps (32768 kilobytes/second)

peak: 524 Mbps (65536 kilobytes/second)

burst: 65536 kilobytes

$ openstack flavor set FLAVOR-NAME \ --property quota:vif_outbound_average=32768 \ --property quota:vif_outbound_peak=65536 \ --property quota:vif_outbound_burst=65536 \ --property quota:vif_inbound_average=32768 \ --property quota:vif_inbound_peak=65536 \ --property quota:vif_inbound_burst=65536NoteAll the speed limit values in above example are specified in kilobytes/second. And burst values are in kilobytes. Values were converted using 'Data rate units on Wikipedia <https://en.wikipedia.org/wiki/Data_rate_units>`_.

- Watchdog behavior

For the libvirt driver, you can enable and set the behavior of a virtual hardware watchdog device for each flavor. Watchdog devices keep an eye on the guest server, and carry out the configured action, if the server hangs. The watchdog uses the i6300esb device (emulating a PCI Intel 6300ESB). If

hw:watchdog_actionis not specified, the watchdog is disabled.To set the behavior, use:

$ openstack flavor set FLAVOR-NAME --property hw:watchdog_action=ACTION

Valid ACTION values are:

disabled: (default) The device is not attached.reset: Forcefully reset the guest.poweroff: Forcefully power off the guest.pause: Pause the guest.none: Only enable the watchdog; do nothing if the server hangs.

NoteWatchdog behavior set using a specific image's properties will override behavior set using flavors.

- Random-number generator

If a random-number generator device has been added to the instance through its image properties, the device can be enabled and configured using:

$ openstack flavor set FLAVOR-NAME \ --property hw_rng:allowed=True \ --property hw_rng:rate_bytes=RATE-BYTES \ --property hw_rng:rate_period=RATE-PERIODWhere:

RATE-BYTES: (integer) Allowed amount of bytes that the guest can read from the host's entropy per period.

RATE-PERIOD: (integer) Duration of the read period in seconds.

- CPU topology

For the libvirt driver, you can define the topology of the processors in the virtual machine using properties. The properties with

maxlimit the number that can be selected by the user with image properties.$ openstack flavor set FLAVOR-NAME \ --property hw:cpu_sockets=FLAVOR-SOCKETS \ --property hw:cpu_cores=FLAVOR-CORES \ --property hw:cpu_threads=FLAVOR-THREADS \ --property hw:cpu_max_sockets=FLAVOR-SOCKETS \ --property hw:cpu_max_cores=FLAVOR-CORES \ --property hw:cpu_max_threads=FLAVOR-THREADSWhere:

FLAVOR-SOCKETS: (integer) The number of sockets for the guest VM. By default, this is set to the number of vCPUs requested.